A or B? – That is the question

The reality is that the majority of us haven’t heard about A/B tests, despite regularly participating in them.

In this article, Diana Ring, a specialist in the a1qa Agile Testing Department, describes the main principles of A/B testing and the QA engineer’s role in getting true-to-life test results.

A/B testing is a marketing tool used to evaluate and improve the efficiency of a web resource. To get a better understanding of the notion, it’s vital to answer two questions:

- What is the main objective of A/B testing?

- How is it conducted?

Let’s imagine that we own an online store. One day we decide to change the user interface to get a significant gain in sales. We may want to highlight the BUY button and make it red, assuming that it will become more visible to the customer. However, our decision is a mere hypothesis, and the target audience may well disagree with us. To get the desired effect, we need to back up the hypothesis with numbers. This can be achieved by running A/B tests.

The key goal of A/B tests is to attract more users and maximize the outcome of interest.

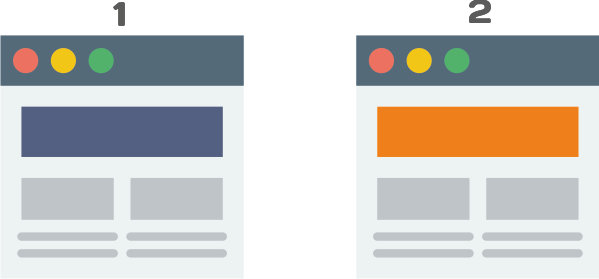

The goal is achieved by creating variations of experimental pages. As the name implies, we take the original page (A) and modify it somehow, thus getting a B version of the page. Then user behavior on the two pages is compared. In this way, users unknowingly vote for the better variation.

A/B tests may be monovariant (split) tests, in which the two variations of the page differ in only one element (button color, section title or font). In every other respect the pages should look the same.

There is also multivariate testing, in which the pages differ in several elements at once. The total number of created variations may be up to four.

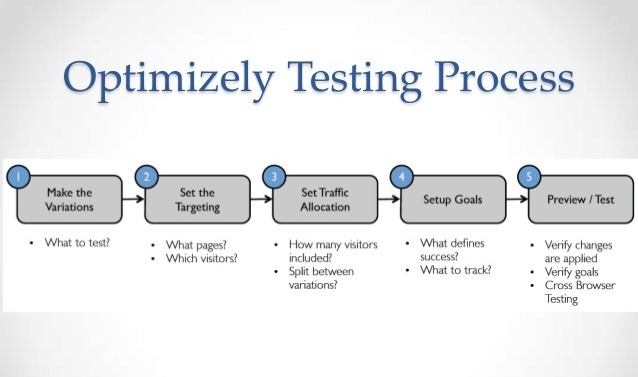

A/B testing process

- First, a hypothesis is formulated. You assume that some modification will perform better than the current version and will increase the visitor-to-lead conversion rate of the website or online store. Conversion rate is the proportion of website visitors who take an action that goes beyond casually viewing content or visiting the site.

- Based on the hypothesis, experimental versions of the page are created. It may be done with the help of external A/B testing services that allow for creating and editing website pages.

- All website visitors are randomly divided into groups. The number of groups should be equal to the number of variations, and every group is assigned to see only one variation. The traffic is also allocated in a random manner. Obviously, one user shouldn’t see different variations of the page. To ensure this, users should be identified (using cookies, for example). Sometimes the test page is shown only to a specific segment of the customer base. In this case segmentation by multiple customer attributes (for example, age and gender) is applied to get more accurate test results. The test usually runs for a couple of weeks.

- During the test run, the metrics are gathered and the conversion rate is measured for every page variation. The conversion rate is the number of target actions completed divided by the number of visitors who saw that variation. Every project has its own target action. For an e-commerce website it is a product purchase, whereas for other projects it may be completing a registration, signing up for e-mails, clicking a banner advertisement, etc. But in all cases, the conversion rate determines which variation performs better.

- Users’ interaction with every variation is measured and analyzed. Based on the data collected, it’s possible to determine whether the change had a positive effect, a negative effect or no effect at all. A variation that has a positive effect should then be rolled out on the website.
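
To make the grouping and measuring steps more concrete, here is a minimal Python sketch. It assumes every visitor already has a stable ID (for example, a value stored in a cookie), and it hashes that ID so the same visitor always lands in the same group. The names `assign_variation`, `conversion_rates` and `red-buy-button`, as well as the visitor data, are made up for illustration and do not come from any particular A/B testing service.

```python
import hashlib
from collections import Counter

VARIATIONS = ["A", "B"]  # hypothetical variation names


def assign_variation(user_id: str, experiment: str = "red-buy-button") -> str:
    """Map a user to one variation; the same ID always gets the same one."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return VARIATIONS[int(digest, 16) % len(VARIATIONS)]


def conversion_rates(visitors, converted):
    """Return {variation: conversions / visitors} for the given user IDs."""
    seen = Counter(assign_variation(u) for u in visitors)
    done = Counter(assign_variation(u) for u in converted)
    # Skip variations nobody saw to avoid dividing by zero.
    return {v: done[v] / seen[v] for v in VARIATIONS if seen[v]}


# Made-up data: 1000 visitors, every tenth one completes the target action.
visitors = [f"user-{i}" for i in range(1000)]
buyers = visitors[::10]
rates = conversion_rates(visitors, buyers)
for name, rate in rates.items():
    print(f"Variation {name}: {rate:.1%} conversion")
```

Hashing the ID is only one way to keep a visitor in the same group between visits; storing the assigned variation in the cookie itself would serve the same purpose.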

This is the step-by-step algorithm of running A/B experiments. What is the software testing engineers’ role in this process?

Once the variations are created, the tests are run in a testing environment, where QA engineers verify the functionality of every variation, its compliance with the website design and functional requirements, and the accuracy of data collection.

How can the A/B tests be optimized?

A number of tools available online help run A/B tests and make a sound decision. The most popular of them are the following:

- Google Analytics Content Experiments

- BestABtest

- Visual Website Optimizer

- Unbounce

- Evergage

- Optimizely

A/B testing software lets you create test pages, make the desired changes, set up tests, allocate website traffic and collect the relevant data.

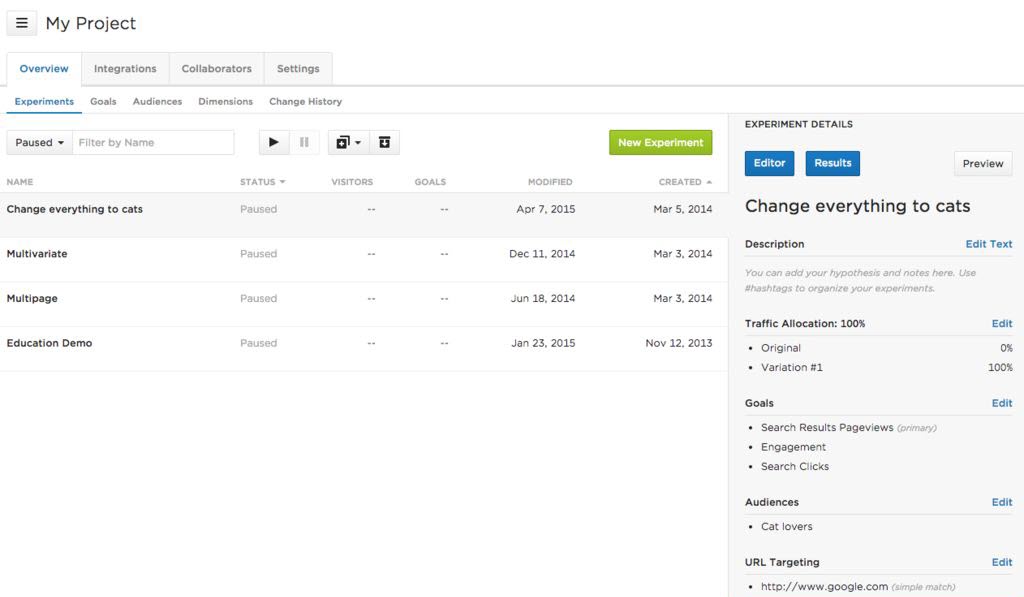

Let’s look closer at Optimizely, an easy-to-use platform for running split and multivariate A/B tests. It allows for segmenting the target audience, creating new experiments and gathering experiment results.

The main page lists all currently active A/B tests, the number of users interacting with each test, and the dates of its launch and last modification.

The test description shows how many experiments have been launched, the traffic allocation, the test goals and the target audience. The goals may differ: completing an action (adding a product to the shopping cart), reaching a set session length, the total number of purchases or just clicking a link. During the test runs we, testing engineers, should verify that the data is collected properly.

To check all A/B variations, we should edit the traffic allocation. To do this, we assign 100% of users to see page A, then page B, etc.

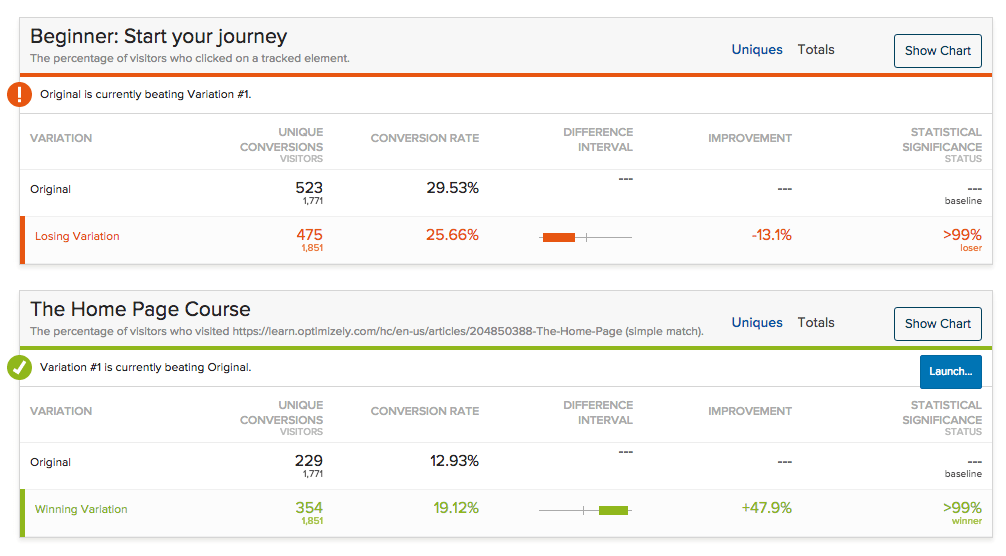
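
The idea behind editing the traffic allocation can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions, not Optimizely’s implementation: `bucket` and `pick_variation` are hypothetical helpers, and the allocation is a plain dictionary of traffic shares that sum to 1.

```python
import hashlib


def bucket(user_id: str) -> float:
    """Hash a user ID to a stable number in the range [0, 1)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 16**8


def pick_variation(user_id: str, allocation: dict) -> str:
    """Choose a variation according to traffic shares that sum to 1."""
    point = bucket(user_id)
    upper = 0.0
    for name, share in allocation.items():
        upper += share
        if point < upper:
            return name
    return name  # guard against floating-point rounding in the shares


users = [f"user-{i}" for i in range(500)]

# Normal run: traffic is split evenly between the two pages.
even_split = {"A": 0.5, "B": 0.5}

# QA run: send everyone to page B so that it can be checked in isolation.
only_b = {"A": 0.0, "B": 1.0}
print({pick_variation(u, only_b) for u in users})
```

With the share of one page set to 100%, every tester sees that page regardless of their ID, which is what makes it possible to check each variation in turn.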

The Optimizely page with test results displays the losing and winning variations. The conversion rate is also calculated here.
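
How might we judge whether a gap between two conversion rates is more than random noise? One common textbook approach is a two-proportion z-test, sketched below. We do not claim that Optimizely or any other tool calculates its results this way. The function name and the visitor counts are invented for the example.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare two conversion rates; return (z score, two-sided p-value)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both pages convert equally well.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no conversions at all, or everyone converted
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


# Invented numbers: 100 of 1000 visitors buy on page A, 130 of 1000 on page B.
z, p = two_proportion_z_test(100, 1000, 130, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value (0.05 is a widely used threshold) suggests that the difference is unlikely to be a coincidence. With few visitors, even a large gap in conversion rates may not pass this check, which is one reason tests are left running for a while.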

Finally, it’s worth saying that users’ behavior on a website is not easy to predict. A/B tests are very helpful here because they let you check any hypothesis, and there’ll be no need to argue over page modifications. Kick off the experiment and wait for your customers to participate.

If your variation is a winner – great! Now see if you can apply learnings from the test on other pages of your website. If the test has generated a negative result, don’t panic. Use the experiment as a learning experience and draw conclusions.

You may try to run the A/B tests on your own or turn to an experienced QA company that will run the test, analyze the results and present the experiment data to you.

Test and convert your visitors into customers!