Benchmark testing of Raspberry Pi operating systems

The Raspberry Pi is a credit-card-sized single-board computer developed in the UK by the Raspberry Pi Foundation to promote the teaching of basic computer science in schools. It was first shown as a prototype in late 2011 as a tool for teaching and learning programming.

Most buyers, once they get their hands on a new RPi, follow the getting started instructions on the Raspberry Pi site and run the recommended Raspbian OS, a Debian-based Linux distribution. Kano OS is a fork of Raspbian. It is an operating system designed for simplicity, speed, and learning to code, and it is targeted at new Raspberry Pi users. It dynamically adjusts the Raspberry Pi's clock speed when the load reaches 100%.

Still, there is a wealth of other operating systems available, and the more alternatives there are, the harder it is to choose. The sensible approach is to define what matters to you and then compare the candidates against it. This is where QA consulting can help: below, we present our benchmark of three operating systems for the Raspberry Pi, based on our testing.

How the benchmark was performed

We selected three operating systems (Raspbian OS, Kano OS and Pidora OS) and ran a range of benchmark tests to see how their performance characteristics differ.

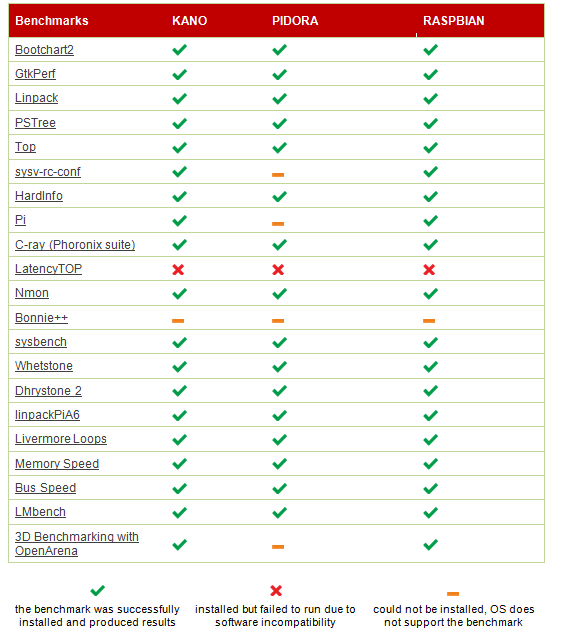

In total, 21 different tests were run, and the results were compared and analysed according to each benchmark's characteristics.

The tests were run under the following conditions:

- Each test was launched in the same system state.

- No other functions or applications were active in the system unless the test scenario itself required background activity.

- Launched applications use memory even when they are minimized or idle, so leaving any of them open could have distorted the results.

- The hardware and software used for benchmarking matched the production environment.

- Three identical boards were used, running Kano OS Beta 1.0.2, Pidora 2014 (Raspberry Pi Fedora Remix, version 20) and Raspbian Debian Wheezy (January 2014 version), each installed on an SD card.

- Benchmarks were launched via commands in Terminal, where real-time activities and results were displayed.
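As an illustration of the setup described above, here is a minimal Python sketch of a harness that launches a command-line benchmark several times and records wall-clock timings. It is not the tooling used for this report: the function names `time_command` and `summarize` and the default of three runs are assumptions made for the example, and the command in the usage section is only a placeholder that starts the Python interpreter.

```python
import statistics
import subprocess
import sys
import time


def time_command(argv, runs=3):
    """Run a command `runs` times and return the wall-clock timings in seconds.

    `argv` is a list such as ["some-benchmark", "--option"] (hypothetical).
    """
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True raises CalledProcessError on a non-zero exit code;
        # a missing executable raises FileNotFoundError, i.e. a failed launch.
        subprocess.run(argv, check=True,
                       stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        timings.append(time.perf_counter() - start)
    return timings


def summarize(timings):
    """Reduce a list of timings to a few summary figures."""
    return {
        "runs": len(timings),
        "mean_s": statistics.mean(timings),
        "min_s": min(timings),
        "max_s": max(timings),
    }


if __name__ == "__main__":
    # Placeholder command: starts the Python interpreter and exits immediately.
    print(summarize(time_command([sys.executable, "-c", "pass"])))
```

Repeating each run and reporting the spread, rather than a single figure, makes it easier to spot results that were disturbed by background activity.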

Benchmarks used

The list below shows every benchmark used and whether it launched successfully or failed.

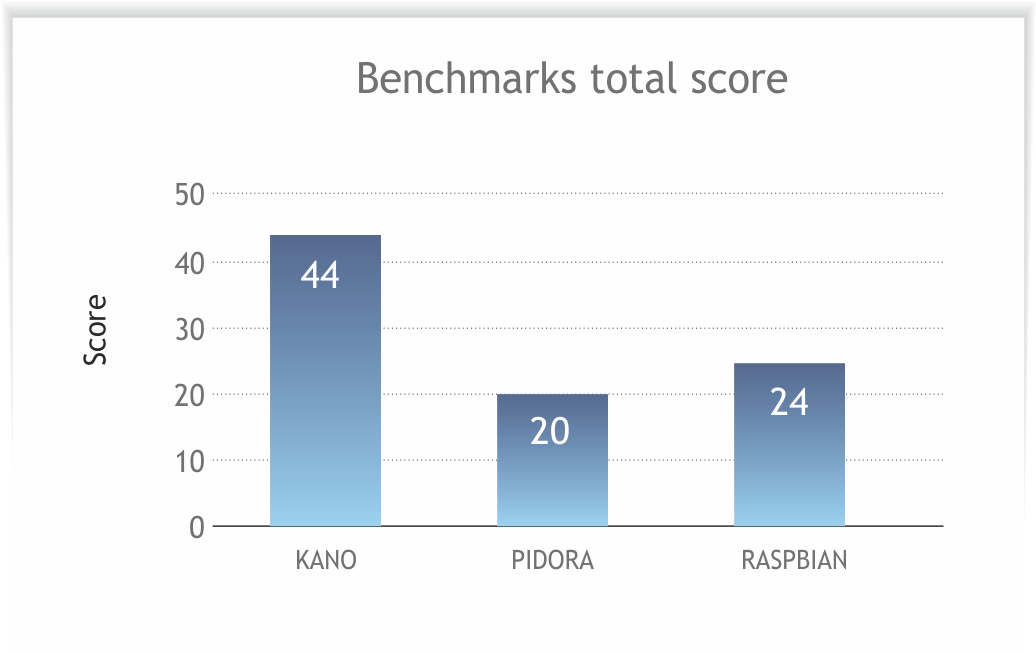

Final OS benchmark score

Not every benchmark launched successfully on every operating system, and several of the tools (PSTree, Top, HardInfo, sysv-rc-conf) do not produce an objective measurement, so the final score should be treated as approximate.
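To show how failed launches can be handled when results are combined, here is a small Python sketch of one possible scoring scheme. It is an assumption made for illustration and not necessarily the method used for this report: each result is normalized against the best value for that benchmark, and any benchmark that did not run on every system is skipped. The function name `relative_scores`, the benchmark names, the system names and all numbers are hypothetical.

```python
def relative_scores(results, higher_is_better):
    """Average each system's result relative to the best one per benchmark.

    results: {benchmark: {system: positive number, or None if it failed}}
    higher_is_better: {benchmark: True for throughput, False for elapsed time}
    Returns {system: score}, where 1.0 means best in every counted benchmark.
    """
    totals, counts = {}, {}
    for bench, per_system in results.items():
        values = list(per_system.values())
        if any(v is None for v in values):
            continue  # skip benchmarks that failed on at least one system
        best = max(values) if higher_is_better[bench] else min(values)
        for system, value in per_system.items():
            ratio = value / best if higher_is_better[bench] else best / value
            totals[system] = totals.get(system, 0.0) + ratio
            counts[system] = counts.get(system, 0) + 1
    return {system: totals[system] / counts[system] for system in totals}


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    results = {
        "throughput_test": {"os_x": 100.0, "os_y": 50.0},
        "elapsed_time_test": {"os_x": 2.0, "os_y": 4.0},
        "failed_somewhere": {"os_x": 5.0, "os_y": None},
    }
    direction = {"throughput_test": True, "elapsed_time_test": False,
                 "failed_somewhere": True}
    print(relative_scores(results, direction))
```

Skipping incomplete benchmarks keeps the comparison fair, at the cost of basing the score on fewer tests, which is one reason such a score is only approximate.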

Overall, Kano OS outperformed Pidora OS and Raspbian OS.

The measurements are approximate and are not scientifically rigorous; our aim was to get a rough idea of how the systems perform. The benchmark values shown here were obtained on specific, carefully installed and configured systems. All performance benchmark values are provided “AS IS” and no warranties or guarantees are given or implied by a1qa. Actual system performance may vary and depends on many factors, including hardware configuration, software design, and system configuration.

The full report, with test result data and benchmark descriptions, is available on request.