How many companies have you heard of that have successfully navigated their digital transformation journey? It’s a challenging process, filled with tough questions along the way. These organizations reimagine IT strategies, introduce innovations, and apply novel approaches to manage both business and operational processes.

According to Gartner, 91% of companies are actively pursuing digital initiatives, highlighting substantial investments in digital transformation efforts to achieve success.

In the banking and financial sector, digital transformation involves adopting technologies like AI, cloud computing, blockchain, and APIs to optimize operations, ensure real-time services, and break down data silos. Banks are shifting to cloud-based platforms for greater flexibility and scalability, while AI and data analytics enable fraud detection and tailored product recommendations. Open banking frameworks, enhanced security measures, and Internet of Things (IoT)-driven innovations are further driving this shift.

Banks are making these changes to eliminate inefficiencies, reduce operational costs, and boost productivity while delivering faster, more personalized solutions. For instance, Bank of America now processes more deposits via mobile channels than through physical branches. The adoption of new technologies allows banks to attract customers, enhance security, and compete with fintech players by offering digital-first products and services.

Despite that, only 16% of executives report a successful digital transformation journey. What slows down the digitalization of the other 84% of companies?

One of the barriers is the growing number of cyberattacks. Ensuring data privacy and proper cybersecurity is a top priority for any company aiming to succeed in executing a transformation program.

However, cybersecurity is just one of many challenges that banks and financial institutions face.

The key challenges include:

- Data security: Protecting sensitive customer data from breaches and cyberattacks remains a major concern.

- Legacy systems: Many institutions still rely on outdated systems, which are difficult to integrate with modern technologies and hinder agility.

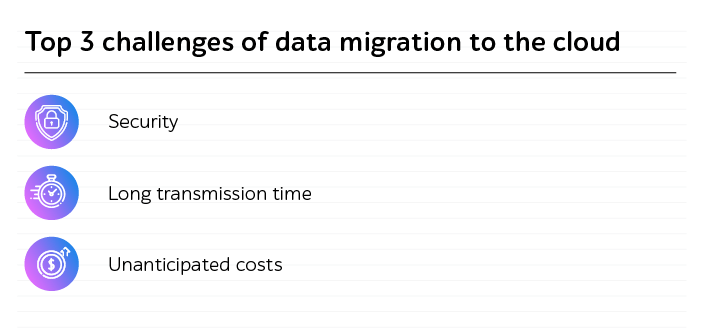

- Cloud adoption: Transitioning from on-premises systems to the cloud can be complex and risky, requiring careful planning to avoid disruptions.

- Data migration: Moving massive amounts of customer and operational data to the cloud or new systems presents both technical and operational risks.

- Software functionality and maintenance: Ensuring software runs smoothly without bugs is critical, as failures can result in significant financial losses.

- Scalability under load: Banking systems must perform efficiently under heavy transaction volumes, especially during peak usage periods.

- Introducing new products: Adding new banking and financial products requires not only new software solutions but also careful integration to avoid conflicts with existing systems.

In this article, we highlight 4 security challenges of digital transformation and QA activities that may help troubleshoot them.

Four security issues that hamper digital transformation

In today’s information era, cybersecurity is often taken for granted. However, with the swift global move to online services and digitalization, companies are encountering a growing volume of cyberthreats.

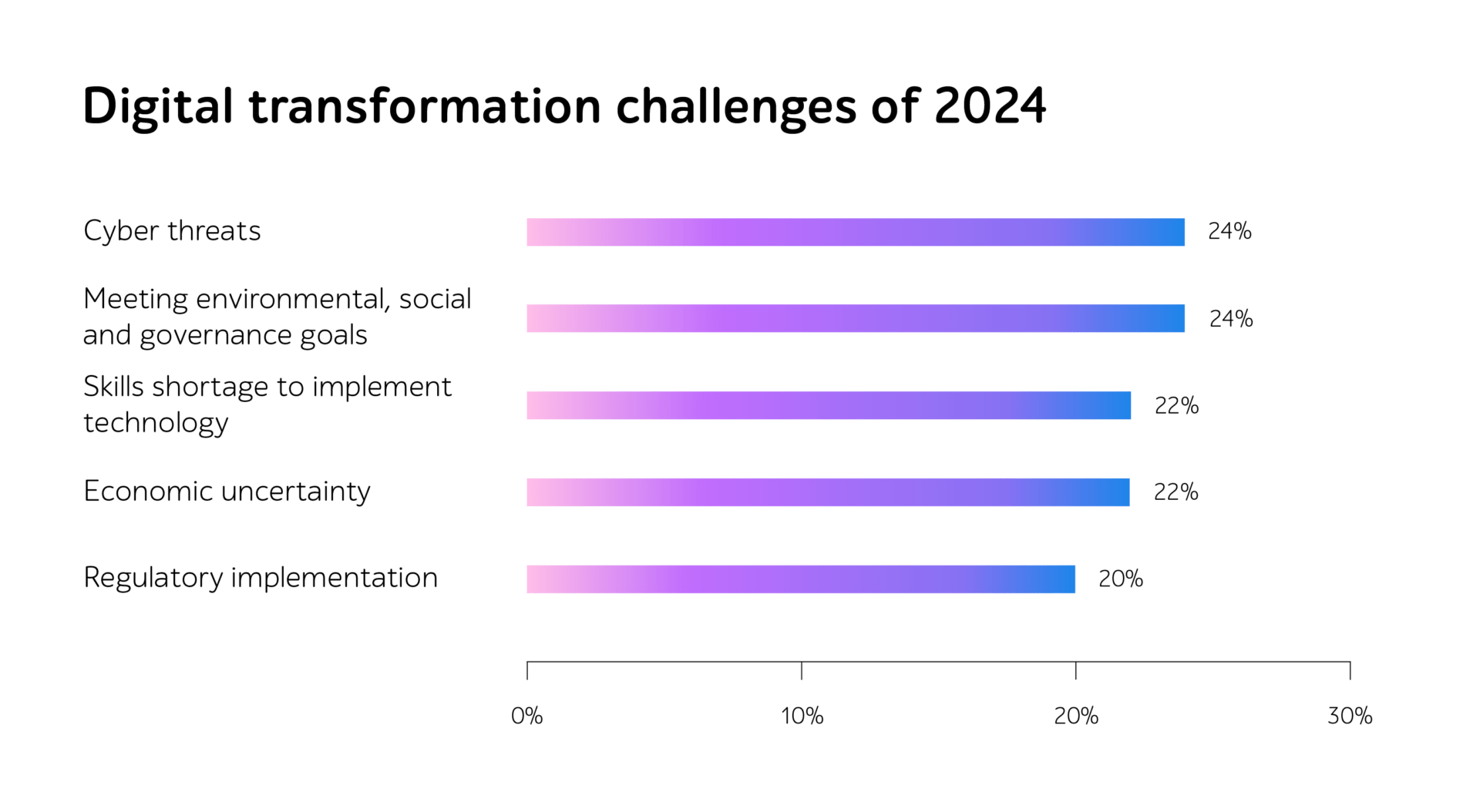

According to Veeam, IT decision-makers include cyberthreats (24%) in the list of issues that hinder digital transformation progress.

Source: Veeam

While undergoing digital transformation, securing web and mobile applications is critical, as they are prime cyberattack targets. Regular vulnerability assessments, penetration testing, and real-time monitoring help identify and mitigate risks before they impact users.

The rise of Open Banking heightens the need to secure APIs and data transfers. Strong encryption, robust authentication, and compliance with regulations like PSD2 safeguard sensitive financial data while ensuring seamless operations.

Data migration during digital transformation also requires secure protection. Encryption in transit, secure storage, and compliance checks reduce the risk of breaches during this process.

Protecting personal data is vital in finance, where breaches can lead to identity theft, fraud, and financial losses. Data masking, access control, and compliance with GDPR or CCPA are essential to protect customer information and maintain trust.
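As an illustration of the data masking mentioned above, here is a minimal Python sketch. The function names `mask_account_number` and `mask_email` and the masking rules (keep the last four digits, keep the first character of the email local part) are assumptions made for this example, not a prescribed standard; real masking policies depend on the applicable regulations and internal rules.

```python
def mask_account_number(account_number: str, visible: int = 4) -> str:
    """Replace all but the last `visible` digits with '*', keeping separators."""
    digits_total = sum(ch.isdigit() for ch in account_number)
    to_mask = max(digits_total - visible, 0)
    masked = []
    for ch in account_number:
        if ch.isdigit() and to_mask > 0:
            masked.append("*")  # hide this digit
            to_mask -= 1
        else:
            masked.append(ch)  # keep separators and the trailing digits
    return "".join(masked)


def mask_email(email: str) -> str:
    """Keep the first character of the local part and the full domain."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        # Not a recognizable address: hide everything.
        return "*" * len(email)
    return local[0] + "*" * (len(local) - 1) + "@" + domain


if __name__ == "__main__":
    print(mask_account_number("4111 1111 1111 1234"))
    print(mask_email("jane.doe@example.com"))
```

Masked values like these can be used in test environments and logs so that QA teams never handle real customer identifiers.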

Finally, robust security frameworks prevent financial losses and ensure compliance. Proactively managing risks and maintaining transparency helps avoid fines, protect reputations, and enable faster, secure digital solutions.

Let’s figure out the top 4 security issues that need tackling to ensure smooth digital transformation.

Security issue #1. Tech evolution with the same safety level

IT infrastructures are steadily expanding by introducing novel technologies. For instance, cloud computing is the front-runner when it comes to delivering enterprise infrastructure.

Cloud security remains a critical concern as threats continue to rise. According to SentinelOne, 80% of companies have reported an increase in the frequency of cloud attacks.

In their Cost of a Data Breach Report 2024, IBM further highlights the risks, revealing that 40% of cloud data breaches involve data stored across multiple environments, with breaches in public clouds incurring the highest average cost of $5.17 million. These findings underscore the urgent need for enhanced security measures in increasingly complex cloud ecosystems.

At the same time, more advanced IT solutions are more susceptible to attacks: enlarged ecosystems broaden the scope of vulnerabilities and give hackers more opportunities.

Security issue #2. Sophisticated cyber incidents

Digital transformation also has a dark side. Alongside bringing value, innovations foster malicious actions by providing advanced tools, environments, and approaches for unauthorized use of applications.

For years, cyber attackers have been building up their malware arsenal, and their behavior has become more unpredictable and better thought out. Today, detecting malicious users and avoiding costly system recovery after an attack is rather complicated, as it requires a rock-solid strategy and continuous control.

Security issue #3. Overcomplicated cybersecurity standards

Personal information is among the most valuable assets of any modern business, so it needs strong protection, which in turn prompts regulation. With the growing intensity of cyberattacks, standards have become stricter and more detailed.

Compliance with cybersecurity standards is a complex and costly task. However, at a time when most banks’ board members are betting on large investments in emerging technologies, 81% of Chief Executive Officers (CEOs) in banks worldwide believe that cybercrime and cybersecurity will significantly hinder organizational growth over the next three years, according to the latest Banking CEO report by KPMG. Despite these concerns, implementing stringent security norms can provide long-term benefits by helping companies achieve certifications and deliver secure, high-quality software to the market.

Regulations and guidelines cover all high-risk industries: the HIPAA security checklist applies to eHealth products, OWASP security recommendations apply to web and mobile apps in any domain, and GDPR governs the secure storage and transfer of personal data.

Security issue #4. Lack of the right-skilled people

While malicious users are constantly refining their skills, businesses don’t always have sufficient budget, experience, and right-skilled employees to address emerging cyberthreats.

Therefore, companies should gradually rethink budget allocation while keeping up with relevant cybersecurity insights and providing advanced training to broaden expertise.

QA for safe digitalization

We strongly believe that prevention is better than cure. Being prepared to respond to any security breach is not about being anxious but about minimizing risks, especially during a crisis. So, what actions can help deal with security issues?

Welcome to the handbook to assist you in releasing highly secure IT products.

1. Strengthen security practices

The more business operations move online, the more vulnerabilities and data breaches occur.

This is why cyber threats remain a critical concern for CIOs in 2024, as highlighted by Logicalis’ latest CIO Report. Despite advancements in cybersecurity, 83% of businesses experienced damaging hacks in 2023, leading to downtime, revenue losses, and regulatory fines. With rapidly evolving risks, including those driven by quantum computing, only 43% of tech leaders feel fully equipped to handle future breaches, emphasizing the urgent need for robust security measures alongside digital transformation efforts.

From initial security assessments to verifying data protection at the go-live stage, businesses can gain substantial value and minimize the risk of cyberattacks. After identifying weaknesses, engineers run penetration tests that imitate hackers’ behavior, recreating real-life conditions so that no critical defect is missed.

2. Shift from DevOps to DevSecOps

DevSecOps is all about thinking ahead and asking “How can I deliver the software to the market successfully?” even at the requirements stage of the SDLC. That, of course, means automating as many processes as possible, including security checks and audits.

DevSecOps assumes a “security-by-design” approach based on the following aspects:

- Caring about data safety from the very start of an IT project

- Applying mechanisms that supervise the impact of newly added features on the overall software security

- Setting up internal safety defaults

- Separating responsibilities for various users

- Introducing several security control points

- Thinking over the actions in case of an app crash

- Performing audits of sensitive parts of the system

- And many others.

By considering these points, it is much easier to enable high data protection and become confident in users’ privacy.
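Two of the points above, safe defaults and separated responsibilities, can be sketched in a few lines of Python. The roles, action names, and the `is_allowed` helper are hypothetical and exist only for this illustration; a real banking system would typically rely on its own access management platform.

```python
from enum import Enum


class Role(Enum):
    TELLER = "teller"
    AUDITOR = "auditor"
    ADMIN = "admin"


# Hypothetical permission map: each role gets only the actions it needs.
PERMISSIONS = {
    Role.TELLER: frozenset({"view_account", "create_transfer"}),
    Role.AUDITOR: frozenset({"view_account", "view_audit_log"}),
    Role.ADMIN: frozenset({"manage_users"}),
}


def is_allowed(role, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in PERMISSIONS.get(role, frozenset())


if __name__ == "__main__":
    print(is_allowed(Role.TELLER, "create_transfer"))   # listed for the role
    print(is_allowed(Role.AUDITOR, "create_transfer"))  # not listed for the role
```

The design choice here is that access is granted only when it is listed explicitly, so a newly added feature stays closed until someone deliberately opens it to a role.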

3. Optimize security testing with automation and continuous security monitoring

Test automation is a way to cope with the escalating intensity and number of cyberattacks. By automating security testing, specialists can swiftly perform checks and detect attacks. Besides, it helps increase overall efficiency on the project, accelerate time to market, and reduce QA costs.
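As a small example of what an automated security check might look like, the Python sketch below verifies that a web response carries a few widely used HTTP security headers. The `missing_security_headers` helper and the chosen header list are assumptions for this illustration; the headers passed in are sample data, and a real suite would take them from actual responses and cover far more than headers.

```python
# Headers this illustrative check expects on every response.
REQUIRED_HEADERS = (
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Content-Type-Options",
)


def missing_security_headers(headers: dict) -> list:
    """Return the required headers absent from `headers` (case-insensitive)."""
    present = {name.lower() for name in headers}
    return [h for h in REQUIRED_HEADERS if h.lower() not in present]


if __name__ == "__main__":
    sample = {
        "strict-transport-security": "max-age=31536000",
        "Content-Type": "text/html",
    }
    # Lists the headers the sample response lacks.
    print(missing_security_headers(sample))
```

A check like this can run on every build, so a missing header is flagged long before release.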

Moreover, companies are moving towards implementing AI and ML in QA processes. Their ability to identify the root causes of an attack and the system’s vulnerabilities helps avoid expensive bug fixing after going live as well as data loss, including theft of intellectual property. The rapid analysis delivered by AI and ML also helps prevent similar attacks and vulnerabilities in the future.

Summarizing

Ensuring data protection and a high level of cybersecurity is among the cornerstones of a successful digital transformation.

As technology advances, hackers are also honing their skills and strengthening their strategies.

To stay one step ahead, companies should consider reinforcing digitalization processes with thorough security testing, including right-skilled personnel, penetration checks, DevSecOps practices, and next-gen QA to guarantee the delivery of reliable and secure software to the market.

Contact a1qa’s experts to get professional QA support in enhancing your cybersecurity level.