Migrating to the cloud is a strategic move for many organizations looking to leverage several benefits, including scalability, flexibility, and cost efficiency. However, this process is fraught with potential risks and challenges.

In this article, we’ll explore the QA activities that help ensure a seamless cloud migration.

Functional testing

Functional testing allows businesses to check whether the application (including all user interactions, features, and integrations) operates correctly in the new cloud environment.

The QA team runs predefined test cases against each feature of the software. These tests should cover all aspects of the IT solution, including user inputs, workflows, and integration points with other systems.

As a result, companies release an IT product that performs its intended tasks without errors, ensuring smooth operation after migration.
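To make "predefined test cases" concrete, here is a minimal sketch of a table-driven check: each case pairs inputs with an expected result, and a small runner reports every mismatch or unexpected error. The feature under test, `calculate_order_total`, and its cases are hypothetical stand-ins; in a real suite the feature function would call the migrated application.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class FunctionalCase:
    """One predefined test case: a name, the inputs to send, and the expected result."""
    name: str
    inputs: dict
    expected: Any


def run_cases(cases: list[FunctionalCase], feature: Callable[..., Any]) -> list[str]:
    """Run every case against the feature and return a description of each failure."""
    failures = []
    for case in cases:
        try:
            actual = feature(**case.inputs)
        except Exception as exc:  # an unexpected error also counts as a failure
            failures.append(f"{case.name}: raised {exc!r}")
            continue
        if actual != case.expected:
            failures.append(f"{case.name}: expected {case.expected!r}, got {actual!r}")
    return failures


def calculate_order_total(prices: list[float], discount: float = 0.0) -> float:
    # Hypothetical feature; in practice this would call the migrated application
    return round(sum(prices) * (1 - discount), 2)


CASES = [
    FunctionalCase("no discount", {"prices": [10.0, 5.5]}, 15.5),
    FunctionalCase("10% discount", {"prices": [100.0], "discount": 0.1}, 90.0),
    FunctionalCase("empty cart", {"prices": []}, 0.0),
]
```

Calling `run_cases(CASES, calculate_order_total)` returns an empty list when the feature behaves as expected. Running the same case table before and after migration gives a simple like-for-like comparison.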

Compatibility testing

The primary objective of compatibility testing is to ensure that applications, databases, and other components work correctly with the target cloud environment. This requires a thorough examination of software versions, dependencies, and configurations to identify any issues that might impede the transition. Integration testing is essential in this process, as it verifies that all systems and services work together seamlessly within the cloud infrastructure.

Before migration, organizations must confirm that their operating systems, middleware, and third-party services are compatible with the cloud platform. Beyond mere compatibility, it’s critical to ensure that these components integrate smoothly, allowing for effective communication and data flow between systems. By focusing on integration testing, organizations can address potential disruptions and ensure a successful transition to the cloud.
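One part of this check can be automated by comparing the component versions an application needs against what the target platform offers. The sketch below assumes a hand-maintained inventory; the component names and version numbers are illustrative only and would in practice come from the cloud provider's documentation for supported versions.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn '3.11' into (3, 11) so versions compare numerically, not alphabetically."""
    return tuple(int(part) for part in text.split("."))


def find_incompatibilities(required: dict[str, str], supported: dict[str, list[str]]) -> list[str]:
    """List components whose minimum required version the target platform cannot satisfy.

    required:  component -> minimum version the application needs
    supported: component -> versions offered by the target cloud platform
    """
    issues = []
    for component, minimum in required.items():
        offered = supported.get(component)
        if not offered:
            issues.append(f"{component}: not offered by the target platform")
            continue
        if not any(parse_version(v) >= parse_version(minimum) for v in offered):
            issues.append(f"{component}: needs >= {minimum}, platform offers {', '.join(offered)}")
    return issues


# Illustrative inventory, not real platform data
REQUIRED = {"postgresql": "14", "python": "3.11", "redis": "7.0", "oracle-client": "19"}
SUPPORTED = {"postgresql": ["13", "14", "15"], "python": ["3.9", "3.10"], "redis": ["7.0", "7.2"]}
```

With these sample values, `find_incompatibilities(REQUIRED, SUPPORTED)` flags the Python runtime (no offered version reaches 3.11) and the missing Oracle client, while PostgreSQL and Redis pass.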

Performance testing

Performance testing evaluates how well the software operates in the cloud environment. It involves assessing performance metrics such as response time and throughput to verify that the app meets the required benchmarks and handles the expected load.

As part of performance testing, the QA team conducts:

- Load testing to simulate the expected user traffic and check how the IT solution performs under normal conditions.

- Stress testing to push the application to its limits and observe how it behaves under extreme loads.

- Scalability testing to verify that the system can scale up or down based on demand.

Thus, companies detect bottlenecks in advance and ensure that the migrated application can provide consistent performance, even during peak usage.
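The core idea behind load testing fits in a few lines: send many requests concurrently, record each latency, and compare percentiles against a target. This is a minimal sketch using only the Python standard library; `fake_request` is a hypothetical stand-in for a call to the migrated service. Dedicated load-testing tools add ramp-up profiles, distributed load generation, and reporting on top of the same principle.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def timed_call(request_fn: Callable[[], object]) -> float:
    """Run one request and return its latency in seconds."""
    start = time.perf_counter()
    request_fn()
    return time.perf_counter() - start


def run_load_test(request_fn: Callable[[], object], total_requests: int, concurrency: int) -> dict:
    """Send total_requests requests using `concurrency` parallel workers."""
    if total_requests < 2:
        raise ValueError("need at least two requests to compute percentiles")
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda _: timed_call(request_fn), range(total_requests)))
    return {
        "requests": len(latencies),
        "mean_s": statistics.mean(latencies),
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
        "p95_s": statistics.quantiles(latencies, n=20)[-1],
        "max_s": max(latencies),
    }


def fake_request() -> None:
    # Hypothetical stand-in for a request to the migrated service
    time.sleep(0.01)


if __name__ == "__main__":
    # Raising concurrency step by step gives a rough view of how latency reacts to growing load
    for workers in (1, 10, 50):
        stats = run_load_test(fake_request, total_requests=100, concurrency=workers)
        print(workers, "workers:", round(stats["p95_s"] * 1000, 1), "ms p95")
```

Running the same loop with concurrency well beyond the expected peak turns it into a crude stress test. A team would typically fail the run when `p95_s` exceeds an agreed latency budget.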

Security testing

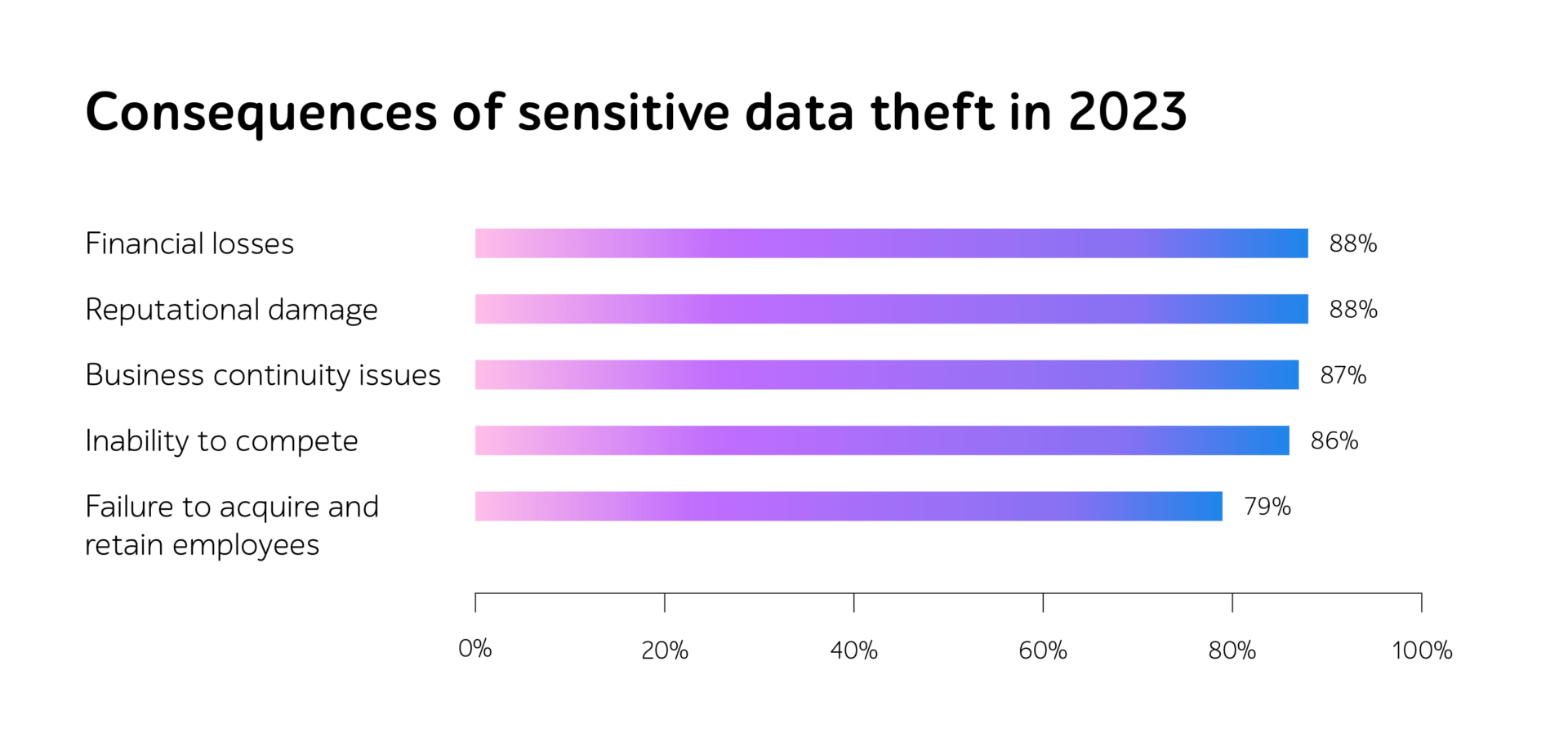

Businesses migrating to the cloud risk exposing sensitive data through misconfigured access controls or cyberattacks that exploit unpatched software vulnerabilities. Such incidents can lead to data breaches, regulatory fines, and severe reputational damage.

So, how can companies prevent such incidents?

- Vulnerability scanning

This process involves using automated tools to scan the cloud environment for known vulnerabilities. These tools check for outdated software, misconfigurations, open ports, and other weaknesses that could be exploited.

By running vulnerability scans, organizations can find these weaknesses and take corrective action before attackers have a chance to exploit them.
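Checking for unexpected open ports is one of the simplest checks a vulnerability scanner automates. The sketch below covers only that single check with Python's `socket` module; the allow-list is a hypothetical policy, and real scanners also fingerprint services and match them against known vulnerabilities. Only scan hosts you are authorized to test.

```python
import socket


def find_open_ports(host: str, ports: list[int], timeout: float = 0.5) -> list[int]:
    """Return the ports on `host` that accept a TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            try:
                # connect_ex returns 0 when the TCP handshake succeeds instead of raising
                if sock.connect_ex((host, port)) == 0:
                    open_ports.append(port)
            except OSError:
                pass  # e.g. an unresolvable host name; treat the port as unreachable
    return open_ports


def unexpected_open_ports(host: str, ports: list[int], allowed: list[int]) -> list[int]:
    """Open ports missing from the approved list are candidates for remediation."""
    return sorted(set(find_open_ports(host, ports)) - set(allowed))
```

For example, `unexpected_open_ports("10.0.0.5", [22, 80, 443, 3306, 5432], allowed=[443])` would flag an exposed SSH or database port on a hypothetical web host that should only serve HTTPS.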

- Penetration testing

Penetration testing simulates real-world attacks on the cloud environment to identify security weaknesses. Testers attempt to breach the system using various attack vectors, such as SQL injection, cross-site scripting, and phishing. Addressing the vulnerabilities they uncover strengthens the security posture of the cloud environment.
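To illustrate the SQL injection vector, here is a toy probe that feeds classic injection strings into a login check and reports any that log in without valid credentials. Everything here is hypothetical: an in-memory SQLite table stands in for the application database, and `login_unsafe` is deliberately vulnerable for contrast with the parameterized `login_safe`. Real penetration tests are far broader and are performed by authorized specialists.

```python
import sqlite3

# A few classic payloads; real test suites use much larger lists
SQLI_PAYLOADS = ["' OR '1'='1", "admin' --", "' OR 1=1 --"]


def make_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('admin', 's3cret')")
    return conn


def login_safe(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # Parameterized query: input is bound as data and never parsed as SQL
    row = conn.execute(
        "SELECT 1 FROM users WHERE name = ? AND password = ?", (name, password)
    ).fetchone()
    return row is not None


def login_unsafe(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # Deliberately vulnerable: input is concatenated straight into the SQL string
    query = f"SELECT 1 FROM users WHERE name = '{name}' AND password = '{password}'"
    return conn.execute(query).fetchone() is not None


def probe_sql_injection(login_fn, conn: sqlite3.Connection) -> list[str]:
    """Return the payloads that bypass authentication."""
    bypasses = []
    for payload in SQLI_PAYLOADS:
        try:
            if login_fn(conn, payload, payload):
                bypasses.append(payload)
        except sqlite3.Error:
            pass  # a database error is not a bypass, though a real test would log it
    return bypasses
```

Against the demo database, every payload bypasses `login_unsafe`, while `login_safe` rejects them all and still accepts the genuine credentials.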

- Compliance checks

Ensuring that the cloud environment adheres to industry standards and regulatory requirements is business-critical, especially for organizations dealing with sensitive data.

Compliance checks verify that the cloud setup meets all applicable standards and regulations, such as GDPR, HIPAA, and PCI DSS. For example, a healthcare organization migrating to the cloud must ensure that its environment complies with HIPAA regulations to protect patient data.
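Parts of a compliance review can be automated as rules evaluated against resource configurations. The sketch below is illustrative only: the configuration fields (`encrypted_at_rest`, `public_access`, `tls_min_version`, `region`), the approved regions, and the rules themselves are hypothetical examples of technical controls, not a statement of what GDPR, HIPAA, or PCI DSS actually require.

```python
from typing import Callable

# Hypothetical policy: regions where this organization has approved storing data
APPROVED_REGIONS = {"eu-central", "eu-west"}


def tls_at_least_1_2(config: dict) -> bool:
    version = tuple(int(p) for p in config.get("tls_min_version", "0").split("."))
    return version >= (1, 2)


# Each rule is (description, check); a check returns True when the resource complies
RULES: list[tuple[str, Callable[[dict], bool]]] = [
    ("encryption at rest enabled", lambda c: c.get("encrypted_at_rest") is True),
    ("no public access", lambda c: c.get("public_access") is False),
    ("TLS 1.2 or newer", tls_at_least_1_2),
    ("data stored in an approved region", lambda c: c.get("region") in APPROVED_REGIONS),
]


def audit(resources: dict[str, dict]) -> dict[str, list[str]]:
    """Return {resource name: failed rule descriptions} for non-compliant resources only."""
    findings = {}
    for name, config in resources.items():
        failed = [description for description, check in RULES if not check(config)]
        if failed:
            findings[name] = failed
    return findings


# Illustrative configurations, not taken from a real environment
RESOURCES = {
    "patient-records-db": {"encrypted_at_rest": True, "public_access": False,
                           "tls_min_version": "1.2", "region": "eu-central"},
    "backup-bucket": {"encrypted_at_rest": False, "public_access": True,
                      "tls_min_version": "1.0", "region": "us-east"},
}
```

Here `audit(RESOURCES)` reports only `backup-bucket`, which fails all four rules. Expressing controls as data like this makes it easy to rerun the audit after every infrastructure change.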

Test automation

Relying solely on manual testing during migration presents significant challenges for QA specialists, such as managing an extensive scope, meeting tight deadlines, and mitigating the risk of human error. Manual testing can also be resource-intensive, requiring substantial time and manpower.

Certain types of testing, such as performance and security testing, are particularly ill-suited to a manual approach because of their repetitive nature, their large scope, and the need to rerun them after frequent deployments, especially in CI/CD environments.

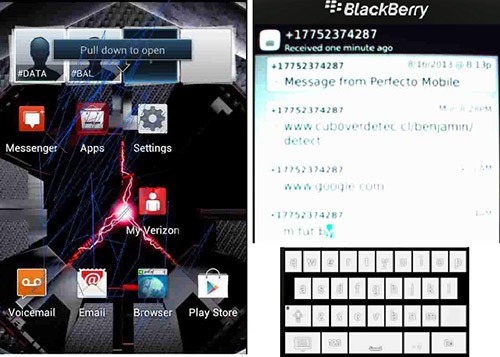

Introducing automated testing into the migration process addresses these challenges effectively. Automation accelerates QA workflows, improves test accuracy, and allows QA engineers to focus on more complex aspects of testing that require human judgment.

One key benefit of test automation is its ability to perform checks that are impractical to execute manually. For example, verifying that all data has been accurately transferred to the cloud database after migration would be time-consuming and error-prone if done manually. Automated testing can rapidly and accurately compare migrated data, ensuring its integrity in a fraction of the time.
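A minimal sketch of such an automated data comparison: hash every row in the source and target tables, keyed by primary key, and report rows that are missing, unexpected, or changed. Two in-memory SQLite databases stand in for the legacy and cloud databases here; the `customers` table and its rows are hypothetical, and a real check would open each database with its own driver.

```python
import hashlib
import sqlite3


def row_digests(conn: sqlite3.Connection, table: str, key: str) -> dict:
    """Map each primary-key value to a SHA-256 digest of the whole row.

    `table` and `key` are interpolated into SQL, so they must come from trusted
    configuration, never from user input.
    """
    cursor = conn.execute(f"SELECT * FROM {table}")
    key_pos = [col[0] for col in cursor.description].index(key)
    return {row[key_pos]: hashlib.sha256(repr(row).encode()).hexdigest() for row in cursor}


def compare_tables(source: sqlite3.Connection, target: sqlite3.Connection, table: str, key: str) -> dict:
    src = row_digests(source, table, key)
    dst = row_digests(target, table, key)
    return {
        "missing_in_target": sorted(src.keys() - dst.keys()),
        "unexpected_in_target": sorted(dst.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }


def make_db(rows: list[tuple]) -> sqlite3.Connection:
    # In-memory SQLite stands in for the legacy or cloud database
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    return conn


if __name__ == "__main__":
    legacy = make_db([(1, "Alice", "alice@example.com"), (2, "Bob", "bob@example.com"),
                      (3, "Carol", "carol@example.com")])
    cloud = make_db([(1, "Alice", "alice@example.com"), (2, "Bob", "bob@exmaple.com")])
    print(compare_tables(legacy, cloud, "customers", "id"))
    # {'missing_in_target': [3], 'unexpected_in_target': [], 'changed': [2]}
```

Because the digest covers the Python representation of each row, a value stored as `10` in one database and `10.0` in the other is reported as changed. When source and target run on different database engines, normalizing types before hashing avoids such false positives.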

Additionally, automation enhances test coverage by executing more test cases in less time, which is especially valuable for large and complex software systems. By leveraging automation, organizations can validate the migration more thoroughly and efficiently.

To wrap up

The cloud migration process presents several challenges for companies, but QA can help prevent issues, ensure comprehensive testing, and facilitate a smooth transition.

To achieve this, companies should conduct functional, compatibility, performance, security, and automated testing.

Need support with cloud migration testing? Reach out to a1qa’s team.