A study by Verified Market Research showed that the software outsourcing market has experienced significant growth and is projected to reach $897.9 billion by 2031. A dedicated team model (DTM) — widely used in the world of outsourcing — is one of the key solutions for businesses to manage such growth.

In this article, we will answer some common questions about this model and summarize some specific issues one should know before deciding on the DTM.

Curious? Let’s start.

What’s a dedicated team?

Like time & material (T&M) or fixed price (FP), the dedicated team is a business model. Its essence lies in providing the customer with an extension of their in-house team. The scope of work, team structure, and payment terms are specified in the client-service provider agreement.

Choosing this model lets the client shift the focus to business-critical competencies while cutting the cost of management and of searching for, hiring, and training new QA specialists.

The team fully commits to the customer’s needs and to the vision of the business and product, ensuring alignment with long-term goals. Dedicated QA experts work closely with the development and product teams to ensure seamless communication and integration, promptly identify potential risks, and apply best practices to meet the highest standards. At the same time, the QA vendor provides administrative support, monitors the testing environment and infrastructure, measures KPIs, and proposes improvements.

Before taking a step toward the DTM, you might ask yourself whether it suits your particular case. Here are seven signs that you need a dedicated QA team:

- You aim to keep QA costs to a minimum.

- The project requirements are dynamic.

- You have no resources to train or manage your in-house QA team.

- Your project has significant scalability potential.

- You need to meet strict release deadlines and are looking for a team solely dedicated to upholding quality standards throughout accelerated development cycles.

- You are transitioning to Agile or DevOps methodologies and need QA experts with expertise in test automation, continuous integration, and fast feedback loops.

- You are interested in building long-lasting relationships with a QA vendor.

Dedicated team model at a1qa

Vitaly Prus, a1qa Head of testing department, has extensive experience in managing Agile/SAFe teams. He knows how to set up and maintain successful DTMs for both large corporations and startups.

Vitaly, is the DTM popular among a1qa clients?

It is. In fact, the DTM is the most popular engagement model at a1qa: about 60% of the ongoing projects run on this model.

DTM clients: who are they?

Traditionally, the model is more popular with North American and European clients. For example, a Canadian real estate company reached out to a1qa in search of a dedicated QA team to ensure the quality of its flagship software that required rigorous testing to meet diverse user needs.

a1qa’s specialists conducted functional, performance, localization, and compatibility testing, as well as test automation, to detect issues early, guarantee the system’s failsafe operation, and accelerate testing cycles. Additionally, a1qa implemented smart team scalability, dynamically adjusting the team size to align with workload fluctuations and optimize resource utilization throughout every stage of the project.

In my experience, customers choose dedicated teams when they want to extend their in-house crews but have no time to hire or no resources to train new QA talent.

Compared to the fixed price or T&M models, the DTM is about the people, I would say. When opting for a dedicated team, most clients are not just seeking additional testing hands; they want a pool of motivated specialists who will commit to the project, have the flexibility to adapt to changing business demands, stay proactive, and do their best to make the final solution just perfect.

And I can’t stress enough that the DTM is used mainly for long-term projects. For example, we currently maintain teams that have been providing software testing services for 5, 7, and 10 years.

For instance, a1qa assigned five dedicated QA teams to assist one of its clients — a global provider of on-premises and cloud solutions for cyber protection — in accelerating the development of new products and enhancing existing QA practices. The teams focused on core IT products, conducted functional and automated testing for multiple subprojects, provided actionable recommendations for improvement, and verified mobile applications.

To ensure seamless integration, a1qa also developed a comprehensive training program for new team members, equipping them with a strong understanding of technical features. Additionally, a QA manager was assigned to maintain consistently high performance among the dedicated specialists. As a result, the client reduced time to market and accelerated the release of complex software solutions. This successful partnership has now been ongoing for over nine years, reflecting the client’s trust in a1qa’s support.

The dedicated team is about dedication.

Could you list the top 3 advantages for the client?

Besides the inherent commitment resulting from the model’s nature, I would highlight improved software quality through meticulous testing, and cost and time efficiency achieved by identifying issues early and eliminating the need for extensive rework. At a1qa, we also provide smart team scalability, rapidly expanding or reducing the team size as the client’s demands change.

Our teams can work on-site at the client’s premises or operate remotely, as is the case for many of our customers.

We offer a vast pool of specialists with diverse tech skills and industry-centric expertise (continuous and shift-left testing experts, test automation specialists, security and performance testers, UX testers across telecom, BFSI, gaming, eCommerce, eHealth, and more). Our clients also get an independent software quality evaluation along with a variety of testing means and access to the latest technological achievements.

Drop us a line to discuss whether DTM is the right decision for your business.

What are the key factors for DTM success?

The success of any dedicated team depends on how well a service provider takes care of its resources and on the quality of the infrastructure and environment provided.

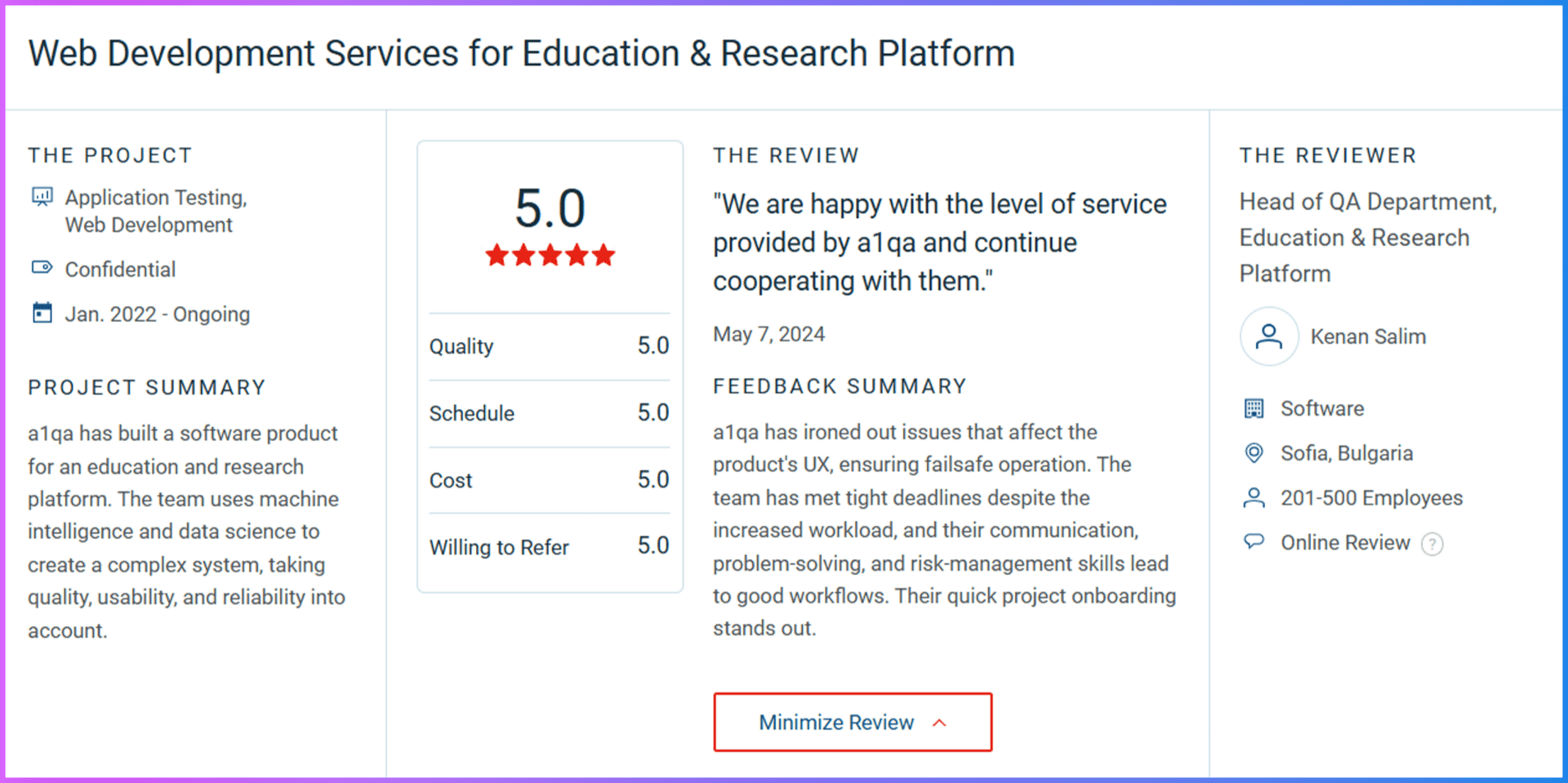

At a1qa, we’ve built a well-structured approach to setting up dedicated teams that takes all of the client’s requirements into account. For instance, to help an innovator in advanced analytics and data usage automate QA, a1qa assembled a dedicated QA team able not only to speed up testing with tailored automated QA files but also to create databases that meet the client’s requirements and reshape QA processes for improved efficiency. Our customers consistently praise the expertise and efficiency of our teams.

In addition, we continuously strengthen QA expertise and leverage innovations in our proprietary R&D and Centers of Excellence. Our team members are eager to gain and expand QA knowledge in our QA Academy, which provides a unique approach to the educational process. All of this aligns with a1qa’s culture of excellence, elevating our processes to the next level of quality.

Does it take long to set up the right team?

Some clients trust our project managers and pass the responsibility to us. Others are more attentive to this matter: they take part in all interviews and review the CVs of all candidate engineers. On average, it takes from a week to a month to set up a team that is ready to start.

If a team requires 10 engineers, we usually recommend assigning only 2-3 specialists at the very start and gradually expanding the team as the project grows. This is more effective than setting up a team of 10 software testers from the very beginning.

Additionally, at the client’s request we can assign a QA manager who will take control over QA tasks and activities to get the maximum value from the DTM.

We can also offer a try-before-you-buy option if a client is not sure that a proposed candidate fully meets their requirements. It’s like a test drive for QA specialists: if there are any doubts, clients have some time to form their opinion and decide whether to continue cooperation with the team member or not.
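The gradual ramp-up recommended above for a 10-engineer team can be sketched as a simple staffing plan. This is only an illustration: the function name `ramp_up_plan`, the fixed growth step of two engineers, and the idea of discrete planning periods are assumptions made for the example, not a1qa's actual process.

```python
def ramp_up_plan(target_size: int, initial_size: int = 3, step: int = 2) -> list[int]:
    """Return the team size for each planning period until the target is reached.

    initial_size and step are hypothetical defaults: start with a small core
    team (the article suggests 2-3 specialists) and add a few people per period.
    """
    if target_size < 1 or initial_size < 1 or step < 1:
        raise ValueError("target_size, initial_size, and step must be positive")

    # Never start with more people than the project ultimately needs.
    sizes = [min(initial_size, target_size)]
    while sizes[-1] < target_size:
        # Grow by `step`, but do not overshoot the target.
        sizes.append(min(sizes[-1] + step, target_size))
    return sizes


print(ramp_up_plan(10))  # [3, 5, 7, 9, 10]
```

In practice the step would follow the project's workload rather than a fixed number, but the shape is the same: a small core team first, then expansion as the project grows.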

How can clients be sure that the team delivers the expected results?

At the start of the project, establish relevant metrics to track the team’s success. Use KPIs to ensure that skilled professionals deliver the required results on time.

As I mentioned earlier, you have full control over your team and can customize the management approach to your preference. Request daily stand-ups and regular status reports to align the communication process with your specific needs.

What is the billing process?

The dedicated team is billed monthly, and the pricing is quite simple: the total depends on the team’s composition, size, and skill set.

When discussing the model with a client, we warn them about downtime costs: if the team happens to have no tasks to perform, the client keeps paying for this time as well.

But in practice, QA and software teams rarely have idle time. Even if technical issues block the testing work (e.g., the test servers or the defect tracking system on the client’s side are temporarily unavailable), the team can proactively suggest and carry out tasks useful for the project, such as preparing test data files.
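To make the billing logic concrete, here is a minimal sketch of how a monthly bill could be computed under this model. The roles and rates are made up for illustration, and `TeamMember` and `monthly_bill` are hypothetical names; actual pricing is defined in the agreement with the vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TeamMember:
    role: str
    monthly_rate: float  # hypothetical rate, in a single currency


def monthly_bill(team: list[TeamMember]) -> float:
    """Sum the monthly rates of all team members.

    Idle time is deliberately not an input: as described above,
    downtime is billed like any other time under the DTM.
    """
    return sum(member.monthly_rate for member in team)


# Illustrative team composition; all figures are assumptions.
team = [
    TeamMember("QA manager", 6000.0),
    TeamMember("Test automation engineer", 5000.0),
    TeamMember("Manual QA engineer", 3500.0),
    TeamMember("Manual QA engineer", 3500.0),
]

print(monthly_bill(team))  # 18000.0
```

The bill changes only when the composition, size, or skill mix of the team changes, which is why the model is predictable for long-term projects.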

Summing up

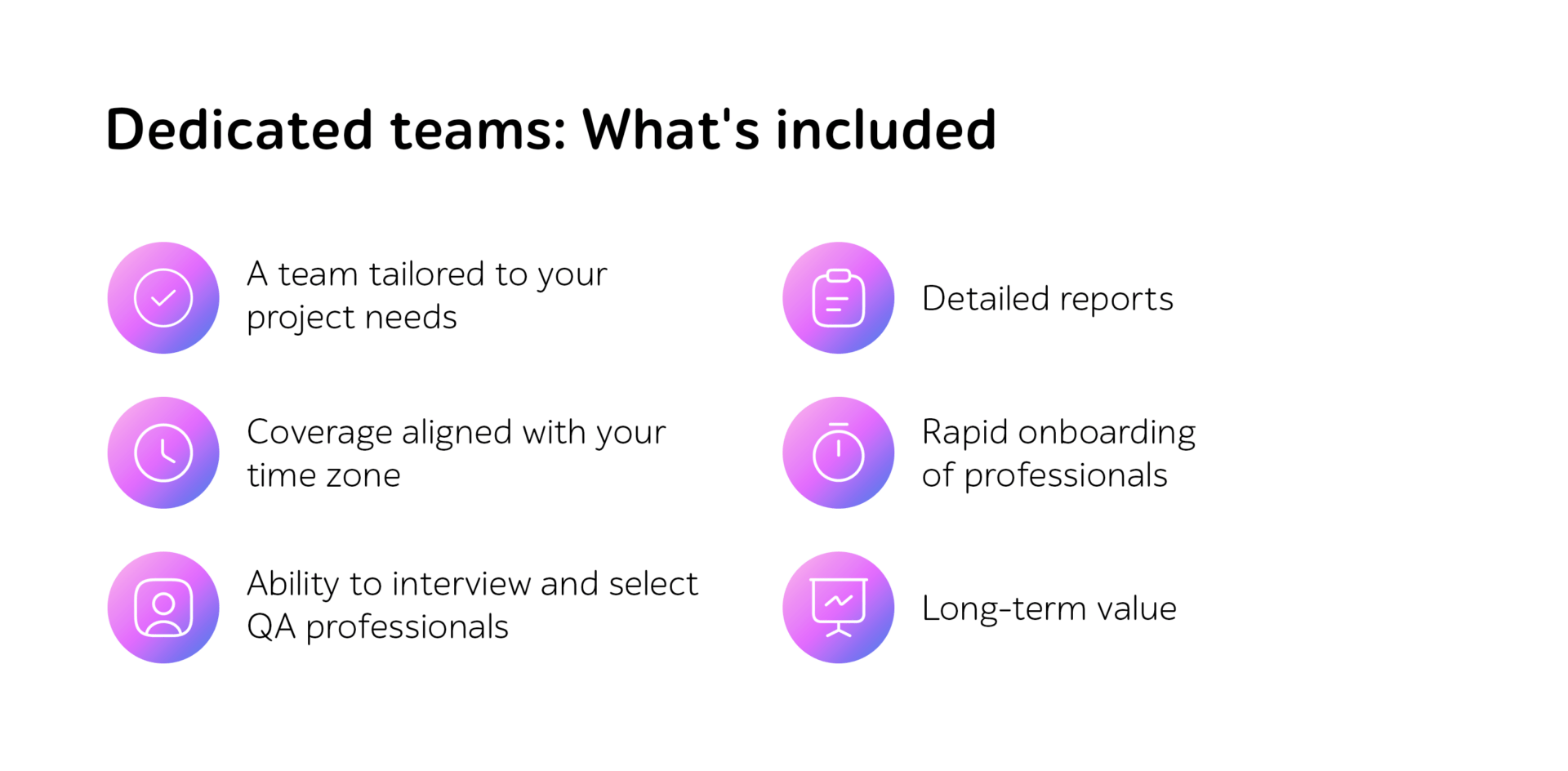

In short, the dedicated team model can help achieve the needed goals and contribute to business success in the market. When working with your team (yes, it is fully yours), you can get a range of benefits:

- Full commitment to your project needs and methodology.

- Adjustment to your time zone.

- Opportunity to interview all specialists.

- Complete control over all project flows.

- Comprehensive reporting and smooth cooperation.

- Rapid resource onboarding.

- Long-term value through accumulated expertise and knowledge retention.

Regardless of the industry, business need, or software product, you can have a capable crew working in the way that suits you best, be it remote, on-site, or mixed collaboration.

Contact the a1qa experts to get a dedicated team that brings the best possible QA solutions to your business.