With customer expectations rising and market competition intensifying, ensuring product performance is non-negotiable: 61% of users experience software-related issues at least once a day.

Organizations that introduce shift-left performance testing can detect critical performance defects early while accelerating time to release, streamlining QA workflows, and ultimately reducing costs.

In this article, we’ll explore why businesses should combine shift-left and performance testing.

The impact of shift-left on software performance optimization

Shift-left testing

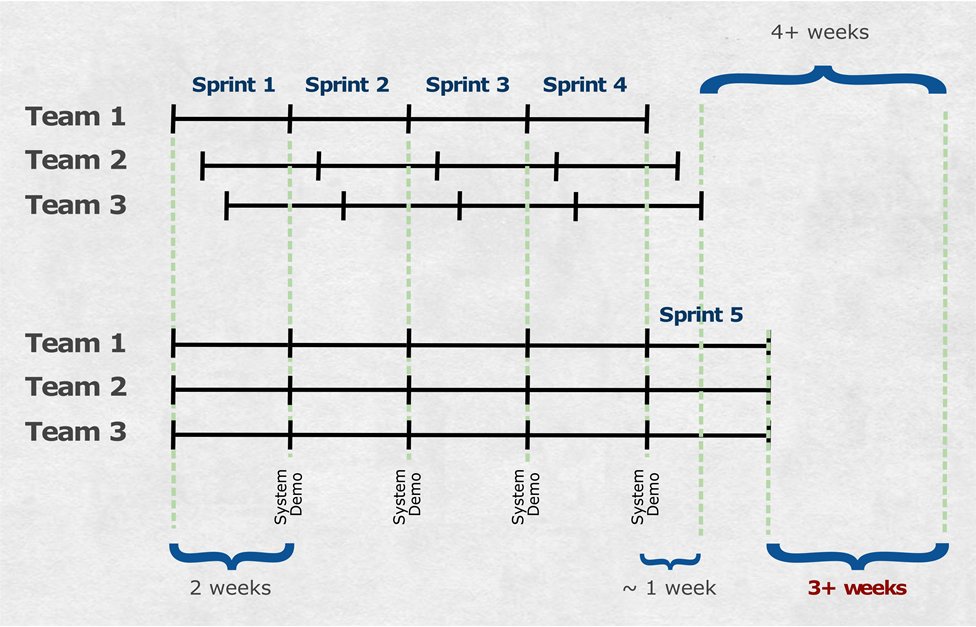

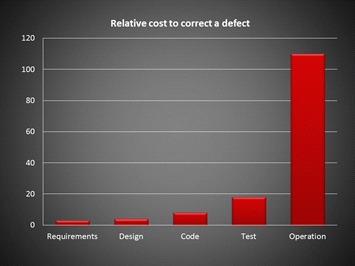

With traditional methodologies such as Waterfall, testing is usually deferred to the later stages of software development, which has several drawbacks. For instance, fixing defects or implementing changes after testing has begun often requires extensive rework, causing project delays and increased expenses.

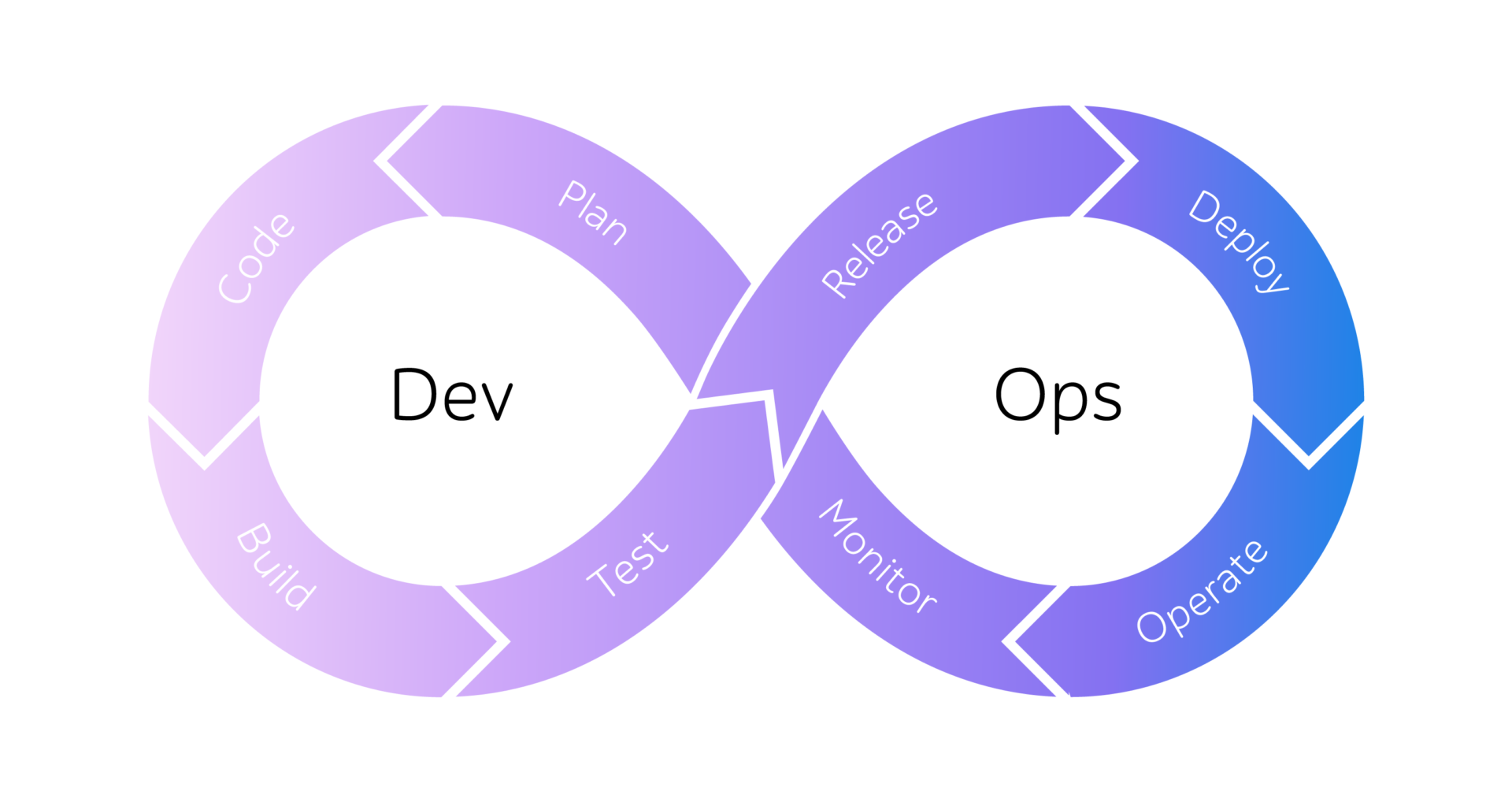

Unlike the late testing typical of Waterfall (although the Waterfall model itself can remain in place), a shift-left testing approach brings QA activities into the initial SDLC phases. This helps businesses prevent defects, avoid the high costs of post-deployment rework, and accelerate the IT solution’s release.

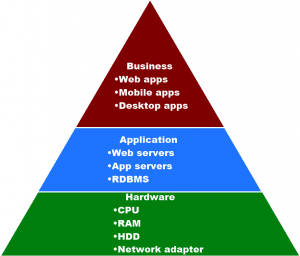

Performance testing

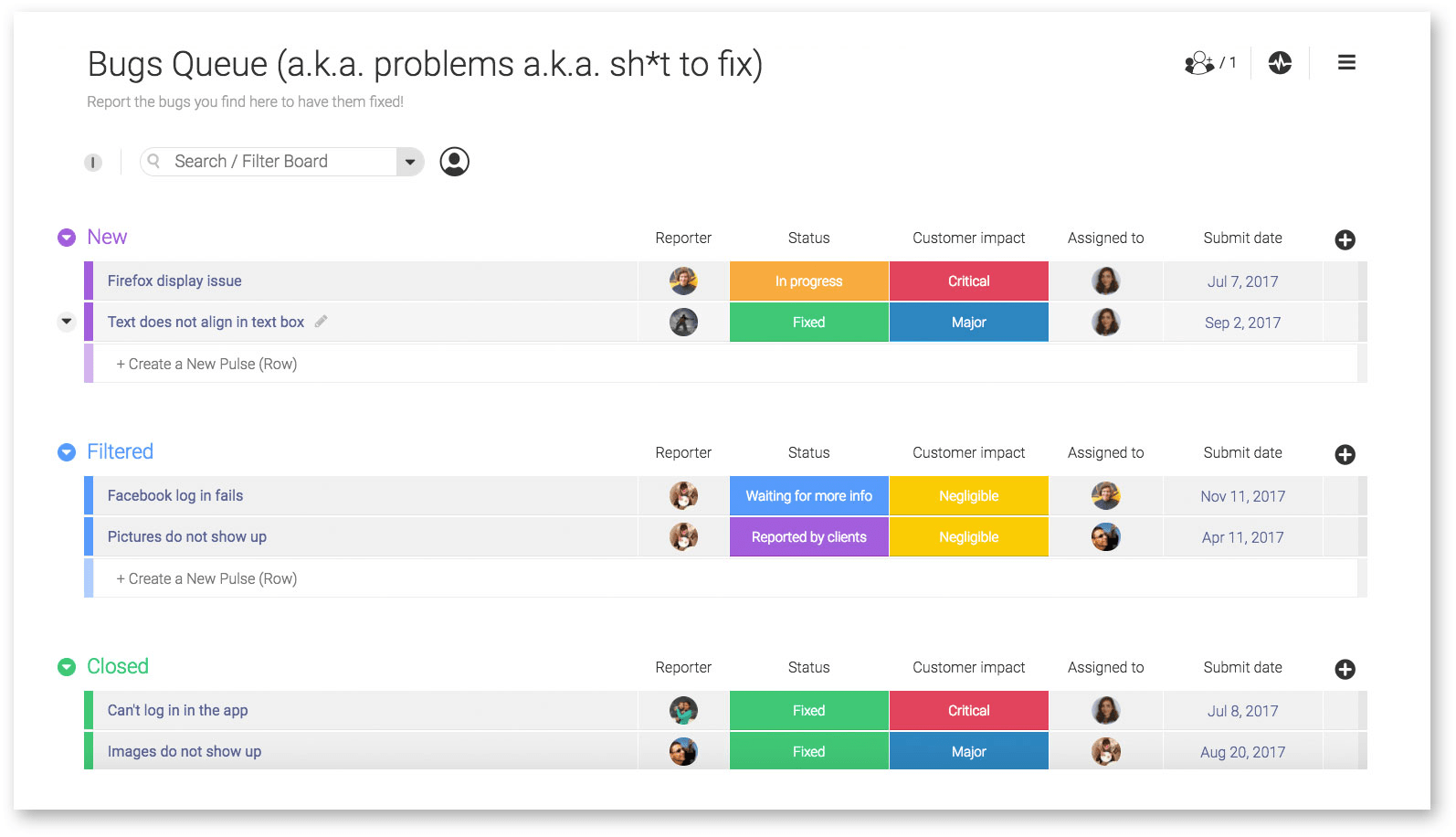

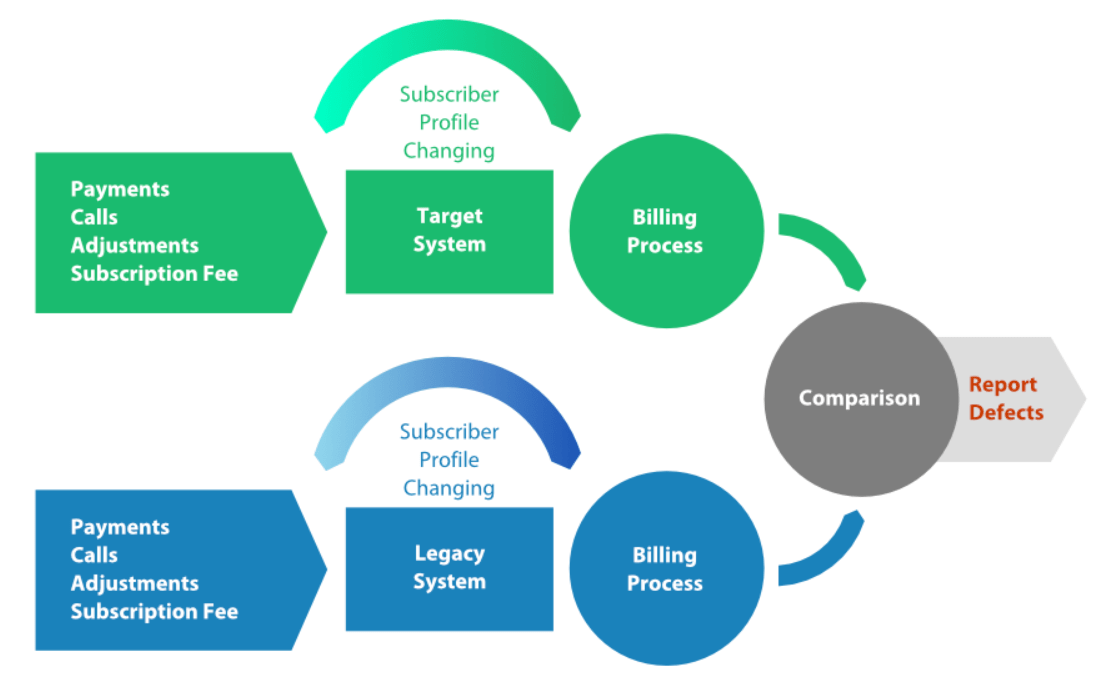

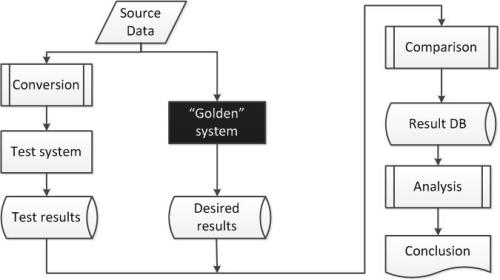

Performance testing focuses on evaluating the responsiveness, scalability, and stability of an application under various conditions. It involves simulating real-world scenarios, such as heavy user traffic or concurrent transactions, to assess how the software behaves under different loads.
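To make the idea of simulating load more concrete, here is a minimal sketch using only the Python standard library. It assumes a hypothetical `handle_request` function standing in for the real operation (in practice this would usually be an HTTP call to the system under test, driven by a dedicated load-testing tool), fires requests from a pool of concurrent workers, and reports latency percentiles.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(payload_size: int) -> int:
    """Hypothetical stand-in for the operation under test (e.g. an API call).

    Sleeps briefly to mimic I/O latency, then does a little CPU work.
    """
    time.sleep(0.005)
    return sum(range(payload_size))


def timed_call(payload_size: int) -> float:
    """Run one request and return its latency in milliseconds."""
    start = time.perf_counter()
    handle_request(payload_size)
    return (time.perf_counter() - start) * 1000.0


def run_load(concurrent_users: int, requests_per_user: int, payload_size: int = 1_000) -> dict:
    """Simulate `concurrent_users` clients together sending users * requests_per_user requests."""
    total = concurrent_users * requests_per_user
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = list(pool.map(timed_call, [payload_size] * total))
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 94 is p95
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "requests": total,
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "max_ms": max(latencies),
    }


if __name__ == "__main__":
    # Compare latency percentiles as the number of simulated users grows
    for users in (1, 10, 50):
        print(users, run_load(concurrent_users=users, requests_per_user=20))
```

Comparing the percentiles across the 1-, 10-, and 50-user runs shows whether response times hold up as load grows. Threads suit I/O-bound stand-ins like this one; generating realistic load against CPU-heavy code in CPython would call for processes or a purpose-built load-testing tool.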

With comprehensive performance checks, companies identify and fix system bottlenecks before they impact user experience and the overall reliability of the IT product.

Why is performance testing relevant within a shift-left approach?

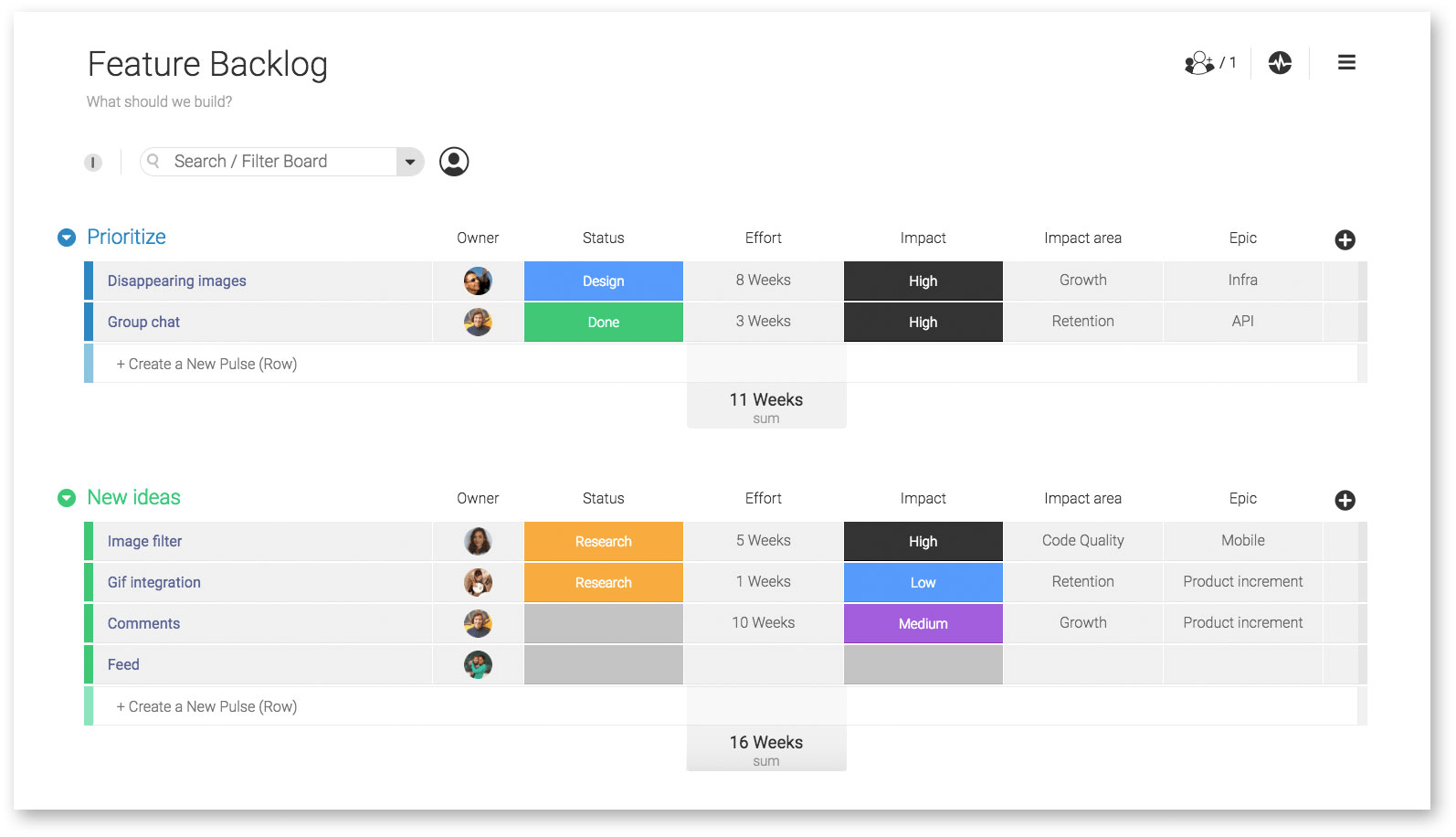

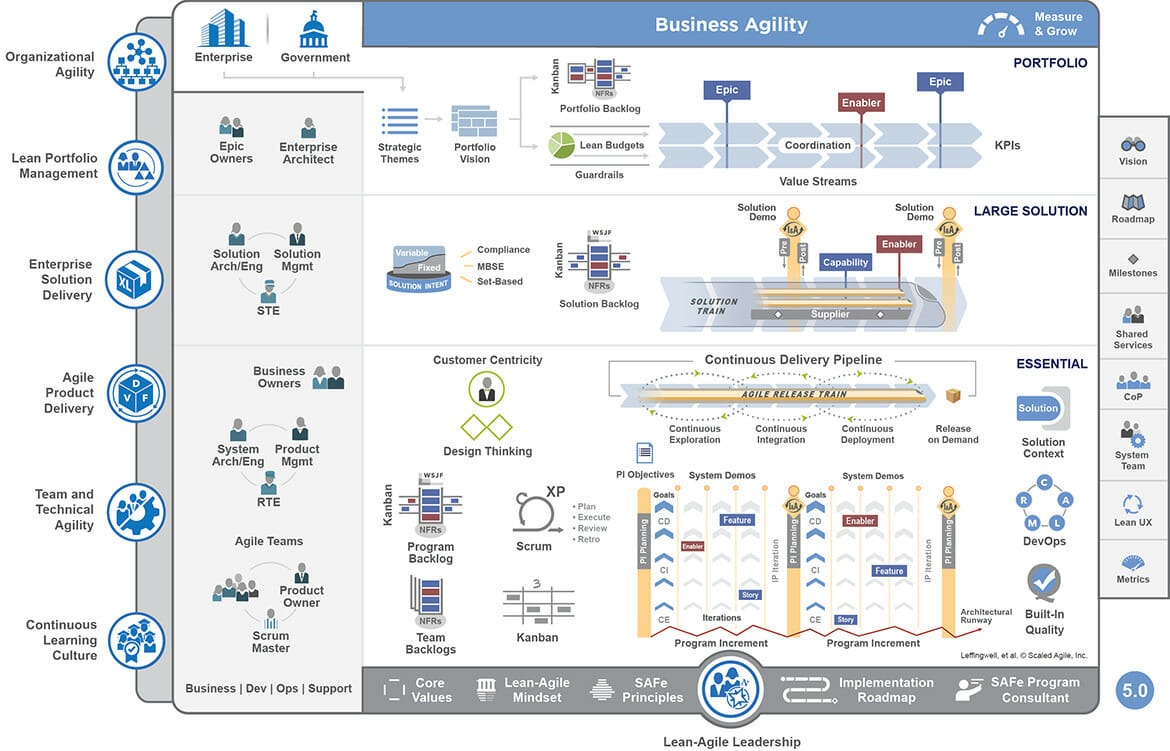

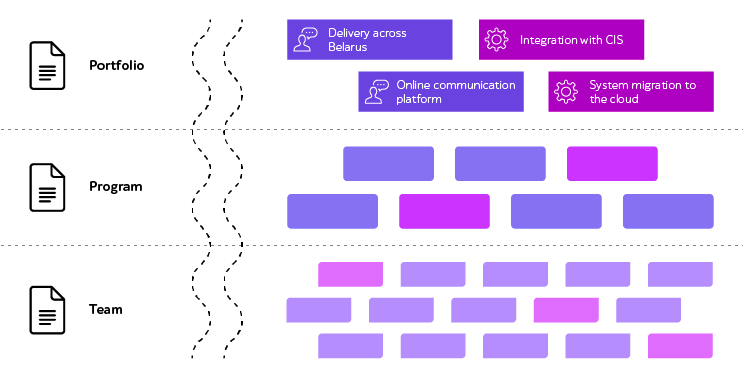

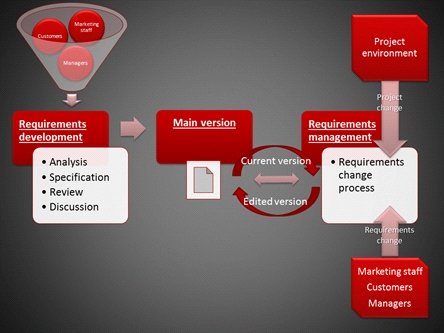

Within shift-left testing, performance testing becomes a core component that helps teams improve software performance from the very start. Rather than being an add-on, it is an integral part of the development lifecycle and serves distinct purposes at different stages.

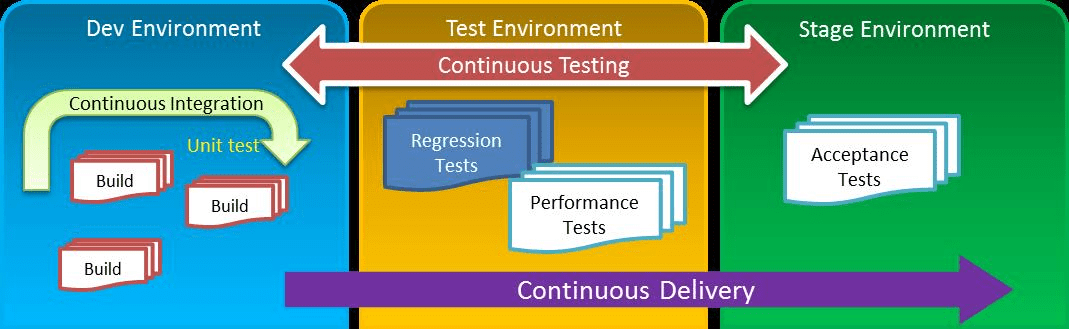

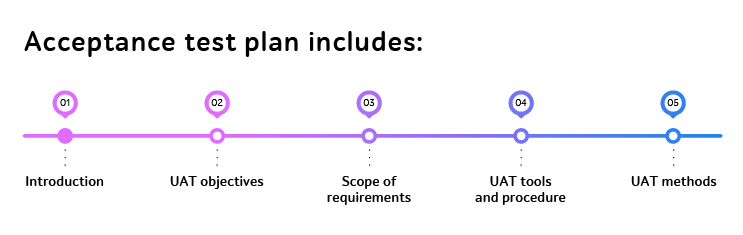

First, companies can run performance tests in each SDLC iteration to assess individual features, allowing teams to identify and fix issues or inefficiencies early on. Second, performance testing evaluates the system as a whole, enabling experts to optimize its architecture and coding practices for better scalability and responsiveness; adding performance checks to the CI/CD pipeline lets teams assess the system with every build. Finally, performance testing conducted before release helps ensure that the IT solution meets performance expectations and withstands real usage scenarios without faltering.
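As a rough illustration of running performance checks with each build, the sketch below acts as a simple CI gate: it measures a feature's latency, compares the 95th percentile against a latency budget and a previous-build baseline, and returns a non-zero code when either check fails. The budget, baseline, 10% tolerance, and `feature_under_test` are hypothetical placeholders, not recommended values.

```python
import statistics
import time
from typing import Callable, List, Optional

# Hypothetical thresholds for illustration only; real values come from your
# own performance requirements and historical baselines.
P95_BUDGET_MS = 50.0
BASELINE_P95_MS = 20.0   # p95 recorded for the previous build (assumed)
MAX_REGRESSION = 0.10    # fail if p95 grows more than 10% over the baseline


def p95(samples_ms: List[float]) -> float:
    """95th percentile of latency samples (inclusive method)."""
    return statistics.quantiles(samples_ms, n=100, method="inclusive")[94]


def check_performance(samples_ms: List[float], budget_ms: float,
                      baseline_ms: Optional[float] = None,
                      max_regression: float = MAX_REGRESSION) -> List[str]:
    """Return human-readable failures; an empty list means the gate passes."""
    failures = []
    observed = p95(samples_ms)
    if observed > budget_ms:
        failures.append(f"p95 {observed:.1f} ms exceeds budget {budget_ms:.1f} ms")
    if baseline_ms is not None and observed > baseline_ms * (1 + max_regression):
        failures.append(
            f"p95 {observed:.1f} ms regressed more than {max_regression:.0%} "
            f"vs baseline {baseline_ms:.1f} ms"
        )
    return failures


def measure(func: Callable[[], object], runs: int = 50) -> List[float]:
    """Call `func` repeatedly and collect latencies in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def feature_under_test() -> None:
    """Hypothetical stand-in for the feature built in this iteration."""
    sorted(range(10_000), reverse=True)


def main() -> int:
    """Run the gate once; return 0 if it passes and 1 otherwise."""
    problems = check_performance(measure(feature_under_test), P95_BUDGET_MS, BASELINE_P95_MS)
    for problem in problems:
        print("PERF GATE FAILED:", problem)
    return 1 if problems else 0
```

A CI step could run this as a script ending in `sys.exit(main())` (with `import sys` added), since most CI systems treat a non-zero exit status as a failed step. Checking against both an absolute budget and the previous build's baseline catches slow features as well as gradual regressions.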

Reaping the benefits of shift-left performance testing

Let’s focus on the advantages of performance testing incorporated within a shift-left approach.

- Better software quality due to early detection of performance issues

Let’s imagine that a company is developing a software product. A shift-left performance testing approach helps identify responsiveness bottlenecks, such as UI lag or slow transitions between application screens. For instance, if the application takes too long to fetch data from the server, these delays degrade end-user interaction. With early and frequent performance checks at the core, companies can address such issues promptly, keep to planned timelines, and release a high-quality IT product, driving higher client engagement and satisfaction.

Thus, by integrating performance testing into the initial stages of development, businesses identify potential software issues, like slow responses, scalability concerns, and architectural flaws before they escalate into critical ones.

- Decreased expenditure

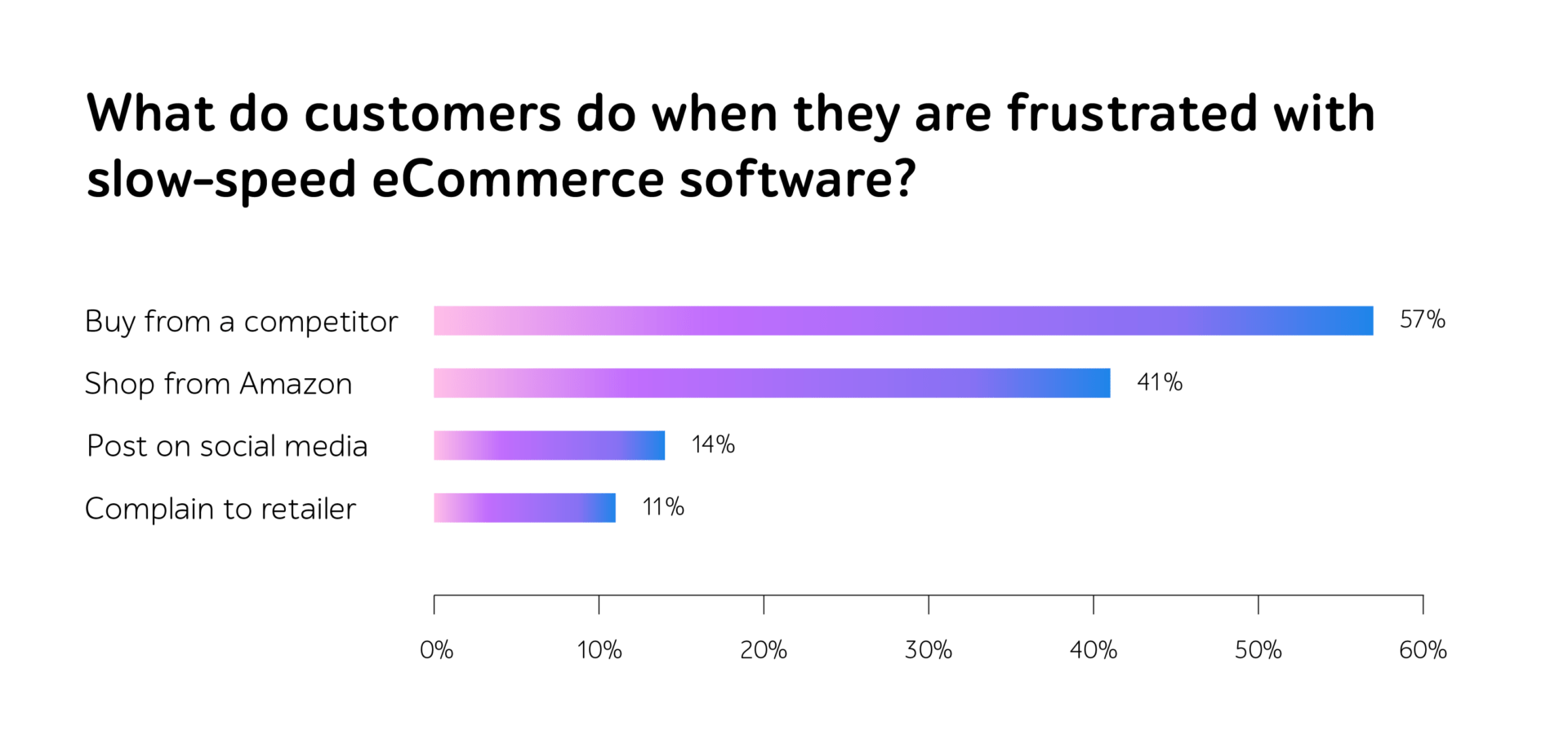

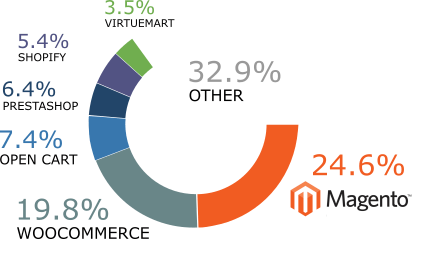

Imagine that an enterprise is preparing an eCommerce website for the shopping season, which brings an influx of clients and substantial profit. If performance issues surface at the last minute and force the launch to be postponed, the company’s reputation and finances may take a hit, right?

Holistic shift-left performance testing equips organizations to mitigate potential issues well in advance of the IT solution release. By integrating this approach into their business strategies, companies avoid the scenario of conducting performance testing as a last-minute check before app launch, which often leaves insufficient time to address any identified bottlenecks. Consequently, they minimize the risk of service disruptions, regulatory penalties, and unexpected expenditures, while ensuring exceptional end-user experiences from the outset.

- Faster time to market

Faster delivery is achieved through careful planning and continuous performance monitoring throughout the software development lifecycle.

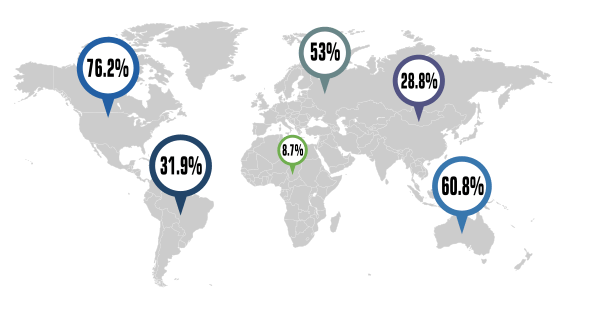

Let’s say a company is creating a cloud application that a huge number of users connect to. With performance testing built into the SDLC, the team can address issues as they arise, preventing the accumulation of problems that could delay the release.

As a result, the final performance tests are less likely to introduce unexpected holdups or require extra time for fixes, allowing the company to deliver the app on schedule and gain a competitive advantage in capturing market share and attracting new customers.

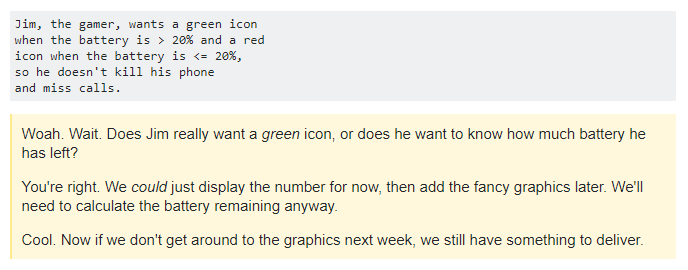

- Refined code quality

By embedding QA activities such as performance testing into the early stages of the software development lifecycle, teams cultivate a quality-centric mindset. Developers consider performance implications from the outset, so potential issues are identified and addressed before they become ingrained in the codebase. The result is cleaner, more efficient code, fewer defects, improved maintainability, and better overall system performance.

Additionally, early feedback allows teams to iterate quickly, refine code, and optimize performance, resulting in higher-quality software products delivered to customers.

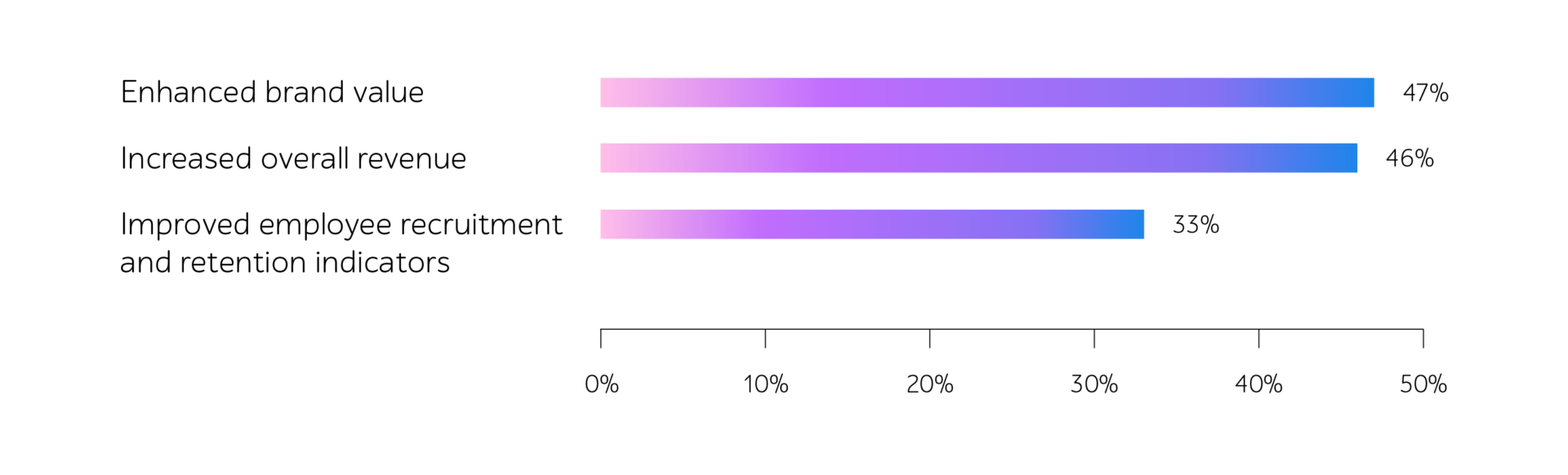

- Improved reputation

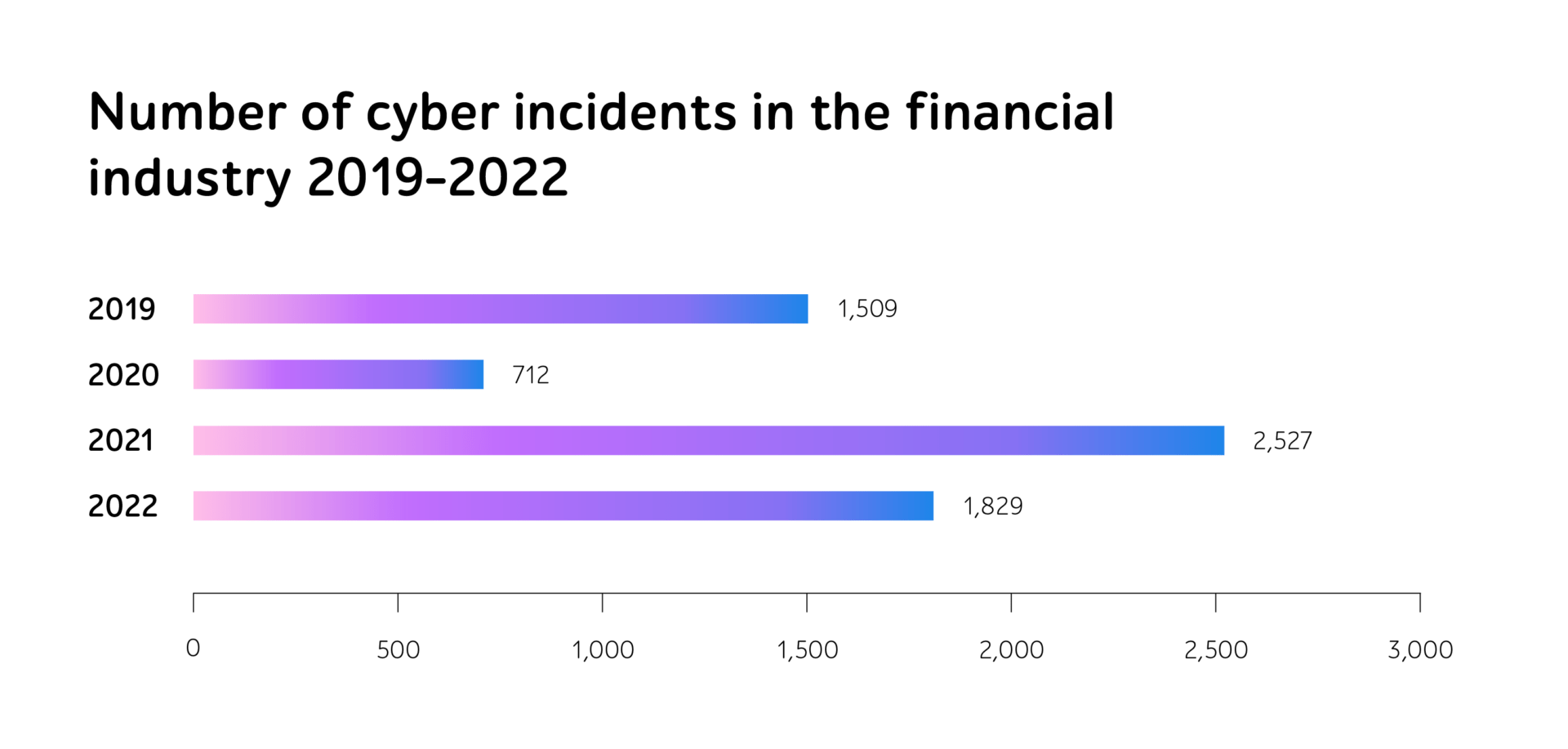

With shift-left performance testing, organizations mitigate the risk of software failures and downtime, thereby enhancing user experience and satisfaction. This commitment to delivering reliable and high-quality IT solutions fosters trust and confidence among customers, strengthening the organization’s reputation and competitive advantage in the marketplace.

Ultimately, a positive brand reputation not only attracts new customers but also cultivates loyalty among existing ones, driving long-term business success and growth.

In brief

Performance testing within a shift-left approach helps organizations strengthen business capabilities and stand out in the IT market.

Among the benefits that companies reap are better software quality, decreased expenditure, faster time to market, refined code quality, and improved reputation.

Want to implement performance testing in the early SDLC stages? Reach out to a1qa’s team for support.