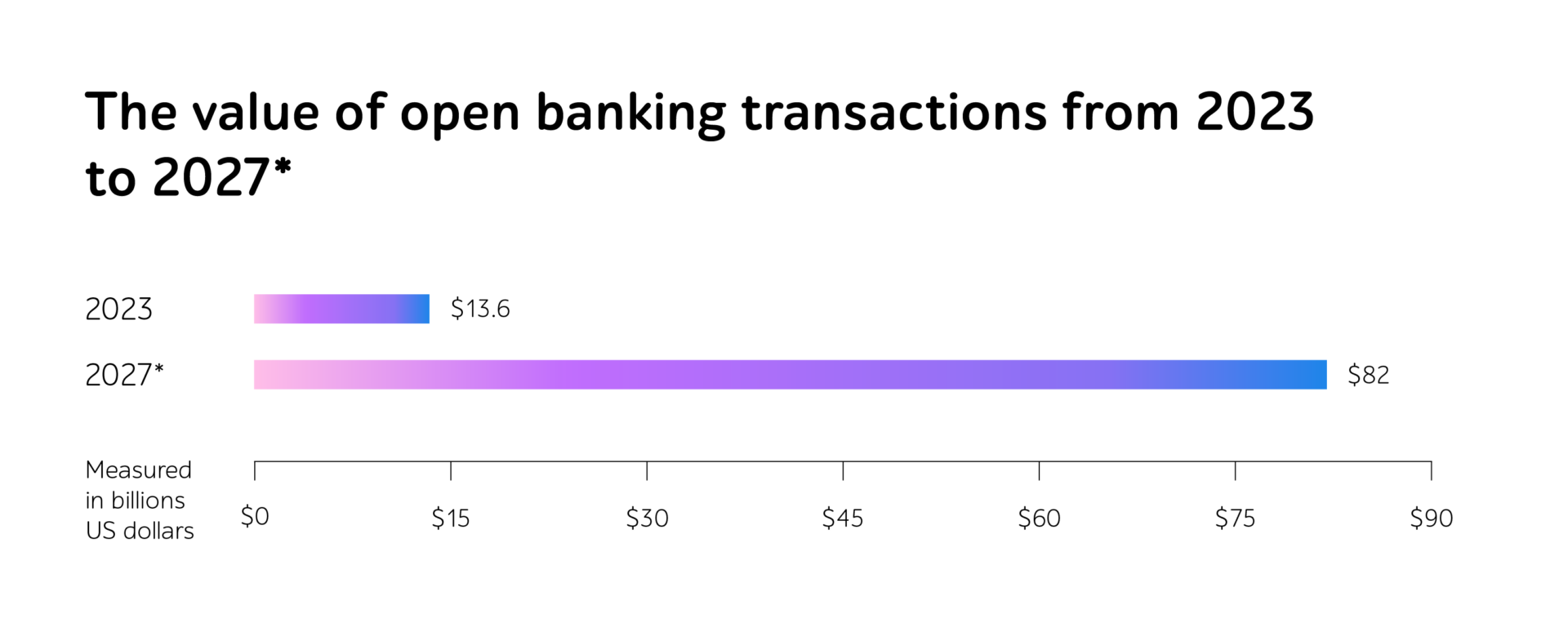

In today’s banking landscape, mobile applications are the frontline of customer engagement. In 2024, McKinsey reported that 92% of banking customers in Europe and the USA had made some sort of digital payment. Mobile apps are becoming the preferred channel for everything from checking balances to applying for loans. But with rising customer expectations and growing competition from fintech companies, one question looms large: how can the banking sector move fast without breaking trust?

The answer lies in quality assurance (QA): the invisible backbone that lets banks and fintechs deliver new apps and features rapidly while safeguarding reliability, security, and user experience. Done right, QA empowers banks to release confidently, without negative headlines about security breaches, crashes, or compliance nightmares.

In this article, let’s analyze the role of software testing in the development of modern banking solutions and dive into strategies that help mitigate release risks, speed up delivery cycles, and build customer loyalty.

Why QA matters in digital banking

It could be argued that banks are no longer just financial institutions; they are also technology companies.

Whether it’s a digital wallet in Singapore or a peer-to-peer payment feature in Frankfurt, customers expect fast, sleek, secure apps – and they want them now. But here’s the challenge: how do you ship new and continuously evolving products while keeping them bug-free, fast, and compliant?

QA provides the safety net. It prevents bugs from slipping into the customer experience, ensures performance under pressure, and confirms regulatory alignment. With that safety net in place, engineering teams at banks can release features faster, with fewer bugs and better customer outcomes.

And in a world where switching banks is as simple as downloading a new app, does your current QA strategy give you the competitive edge to keep customers from jumping ship?

Core QA practices powering modern banking apps

Here are the foundational QA practices that drive quality at scale:

Methods

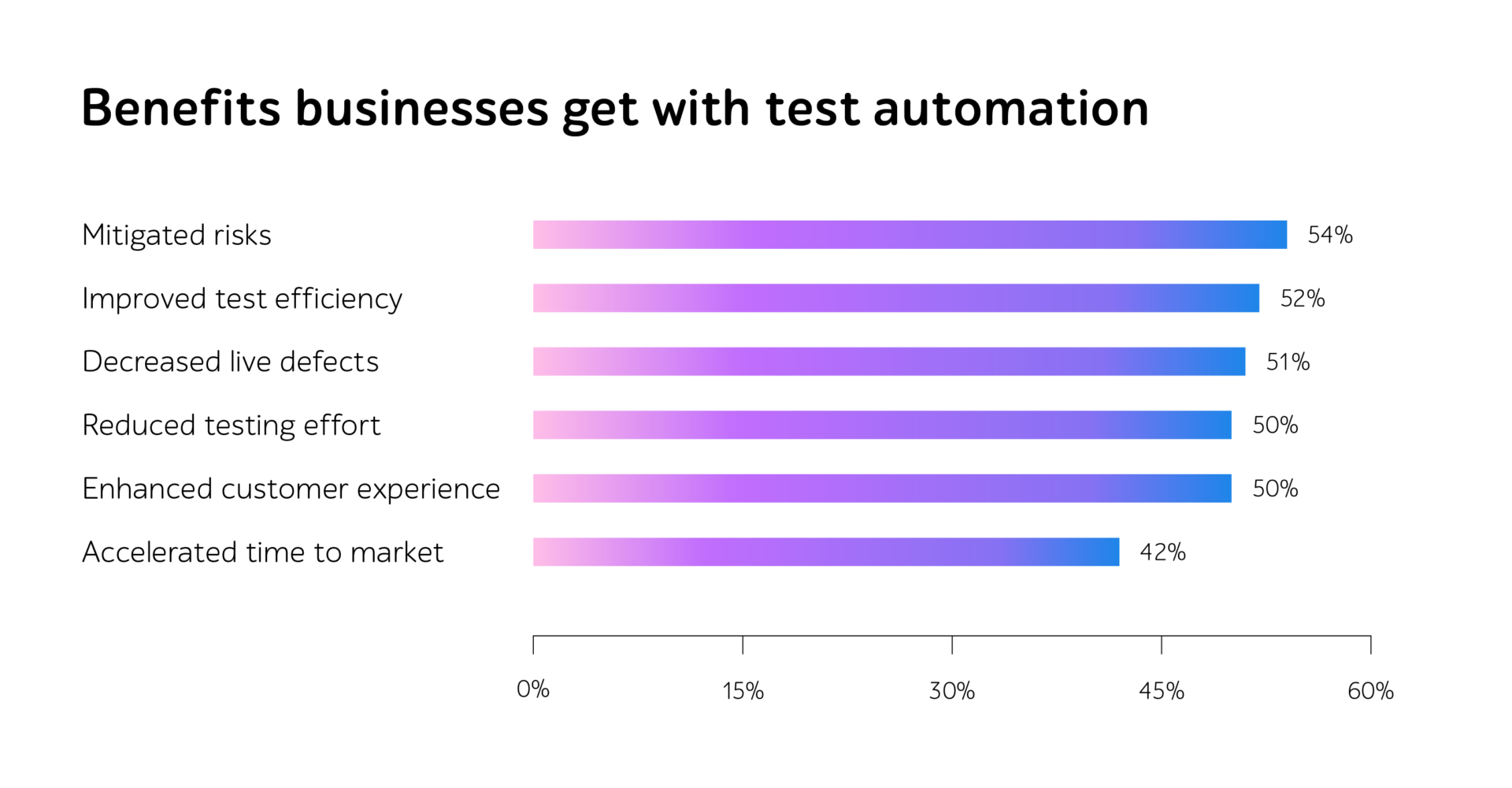

- Test automation: With monthly, weekly, or even daily app updates, automation is essential. It validates new features and provides solid regression coverage to keep existing functionality intact. According to Gartner, 60% of companies that automate testing do so to improve quality, and 58% of that group also aim to accelerate releases.

- Manual exploratory testing: While automation provides coverage, manual testers explore edge cases and user journeys that scripts might miss. Consider a customer who starts a loan application seconds before a session timeout, or while their device is temporarily offline. Will the app preserve their data and recover? Exploratory testing uncovers these unusual conditions, and the insights can later be turned into automated checks.
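
As a rough illustration of that last step, the session-timeout scenario above could be captured as a small automated check. The sketch below is in Python and uses a toy in-memory model. `DraftStore`, `LoanApplicationSession`, and the field names are hypothetical stand-ins, not part of any real banking app or testing framework.

```python
from dataclasses import dataclass, field


@dataclass
class DraftStore:
    """Hypothetical stand-in for whatever persists unfinished forms."""
    drafts: dict = field(default_factory=dict)

    def save(self, customer_id: str, form: dict) -> None:
        self.drafts[customer_id] = dict(form)  # store a copy, not a reference

    def load(self, customer_id: str) -> dict:
        return dict(self.drafts.get(customer_id, {}))


class LoanApplicationSession:
    """Toy model of a loan form that autosaves after every field change."""

    def __init__(self, customer_id: str, store: DraftStore):
        self.customer_id = customer_id
        self.store = store
        self.form = store.load(customer_id)  # pick up any earlier draft
        self.active = True

    def fill(self, field_name: str, value) -> None:
        if not self.active:
            raise RuntimeError("session expired")
        self.form[field_name] = value
        self.store.save(self.customer_id, self.form)

    def expire(self) -> None:
        """Simulate a session timeout: in-memory state is dropped."""
        self.active = False
        self.form = {}


def check_draft_survives_timeout() -> dict:
    """Fill the form, let the session expire, then resume in a new session."""
    store = DraftStore()
    session = LoanApplicationSession("cust-001", store)
    session.fill("amount", 25000)
    session.fill("term_months", 48)
    session.expire()
    resumed = LoanApplicationSession("cust-001", store)
    return resumed.form


if __name__ == "__main__":
    # The resumed session should still hold everything the customer typed.
    assert check_draft_survives_timeout() == {"amount": 25000, "term_months": 48}
    print("draft preserved across timeout")
```

In a real project the same assertion would run against the actual app through a UI or API automation tool, but the shape of the check stays the same: fill, interrupt, resume, compare.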

Types of testing

- Performance testing: Ever had a banking app freeze on payday? Performance testing simulates high-load scenarios – thousands of concurrent logins or transactions – to ensure apps don’t buckle under real-world pressure.

- Security testing: In an era where trust is currency, security testing ensures your app is fortified. It identifies vulnerabilities early – encryption gaps, weak authentication flows – protecting both customers and the brand.

- Functional testing: The baseline check. Every workflow (from sign-in to wire transfers) must behave exactly as the business rules state, even under edge-case conditions.

- Compatibility testing: Banking users span iOS and Android versions, several browsers, and a growing lineup of devices. Compatibility checks keep the experience consistent and glitch-free for everyone.

- Usability testing: A confusing feature is effectively a defect. Real user sessions reveal friction, such as extra taps or unclear labels, that erodes adoption and satisfaction.
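
To make the performance testing idea from the list above more concrete, here is a minimal Python sketch that fires simulated logins in parallel and checks the 95th-percentile latency against a budget. It assumes a stand-in `fake_login` function that simply sleeps for about 10 ms. The 500 ms budget and all names are illustrative assumptions, and a real load test would use a dedicated tool against a test environment.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

LATENCY_BUDGET_SECONDS = 0.5  # assumed target, not taken from any standard


def fake_login(user_id: int) -> float:
    """Hypothetical stand-in for a real login request; returns latency in seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # pretend the server takes about 10 ms
    return time.perf_counter() - start


def run_load(concurrent_users: int,
             request: Callable[[int], float] = fake_login) -> List[float]:
    """Fire one request per simulated user in parallel and collect latencies."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        return list(pool.map(request, range(concurrent_users)))


def percentile(values: List[float], pct: int) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def within_budget(latencies: List[float],
                  budget: float = LATENCY_BUDGET_SECONDS) -> bool:
    """True when the p95 latency stays at or below the budget."""
    return percentile(latencies, 95) <= budget


if __name__ == "__main__":
    latencies = run_load(concurrent_users=50)
    print(f"p95 latency: {percentile(latencies, 95) * 1000:.1f} ms")
    print("within budget" if within_budget(latencies) else "over budget")
```

Judging by a high percentile rather than the average is a deliberate choice here: a payday spike hurts the slowest customers first, and an average can hide them.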

Are you confident your current QA practice already anticipates these moments, or would reinforcing it now help you avoid unwelcome surprises later?

Modern QA practices that are transforming speed and quality

As digital maturity deepens, QA is evolving alongside DevOps and modern software engineering practices. Here’s how leading banks are adapting to stay ahead.

- AI-supported testing

With growing application complexity, QA teams are using AI-supported tools to improve efficiency and accuracy. They analyze historical data to identify risk areas, suggest test coverage improvements, and detect anomalies faster than manual methods. This is not about replacing human judgment. It is about increasing speed, precision, and confidence. AI-assisted testing helps teams manage faster release cycles without sacrificing quality.

- Shift-left testing

In traditional setups, testing happens after the build is complete. But by then, issues are more costly to fix. Shift-left testing introduces quality checks during planning, design, and development. Involving QA early allows teams to clarify requirements and avoid misaligned expectations. This approach reduces delays, improves collaboration, and is especially effective when managing regulatory compliance, complex workflows, or third-party integrations. Sometimes, the biggest risk is not a bug in the code, but a misunderstanding in the requirements.

- Testing in production (as part of DevOps)

Some problems only appear under real-world conditions with live users and data. This is where testing in production, or “shift-right” practices, becomes valuable. Banks are increasingly adopting canary releases, feature flags, and real-time monitoring to validate new functionalities with limited exposure. This is not recklessness. It is a mature DevOps approach backed by observability tools, alerts, and rollback options. It enables fast, safe experimentation.

Modern QA practices focus on embedding quality throughout the software lifecycle. From early planning to live production, these approaches help banks release faster while reducing risk.

Business value beyond bugs

Quality assurance is more than just catching bugs; it directly contributes to business growth and innovation.

Key benefits include:

- Faster releases: Shortened testing cycles accelerate time-to-market.

- Fewer defects: Structured QA and real-user testing significantly reduce production defects.

- Increased productivity: Streamlined testing processes create greater development capacity.

- Enhanced customer trust: Reliable, bug-free experiences encourage customer loyalty.

- Improved regulatory compliance: QA ensures alignment with standards, reducing compliance risks and costs.

However, many organisations still view QA as simply a final step in the development cycle, which limits its effectiveness. A more strategic approach includes:

- Investing in realistic test data to accurately replicate production scenarios.

- Establishing QA Centres of Excellence to unify practices and tools across teams.

- Integrating real-time monitoring and alerts for rapid issue detection and response.

- Using customer insights to prioritise testing efforts effectively.

Ultimately, effective QA is not only about preventing bugs. It enables innovation, accelerates delivery, and empowers teams to confidently pursue opportunities without fear of failure.

Final thought: Is your QA strategy helping or holding you back?

Successful banks deliver reliable apps quickly by embedding QA strategically throughout their processes. They leverage AI-supported testing, shift testing earlier in development, and proactively monitor production environments. A strategic QA approach ensures consistent quality, regulatory compliance, and customer trust, enabling banks to innovate with confidence.

Consider your own approach:

- Is your QA integrated early enough to prevent costly issues rather than reacting to them?

- Do your teams have the right tools and clear priorities to maintain both speed and quality?

- Are you proactively identifying risks and customer needs, or waiting for issues to appear?

- Does your QA strategy actively support innovation, or does it hold your teams back?

If any of these questions raise concerns, it’s time to rethink your QA practices. In digital banking, quality defines reputation. Speed without quality is simply too great a risk.

Get in touch to learn how we can help you move faster without compromising quality.