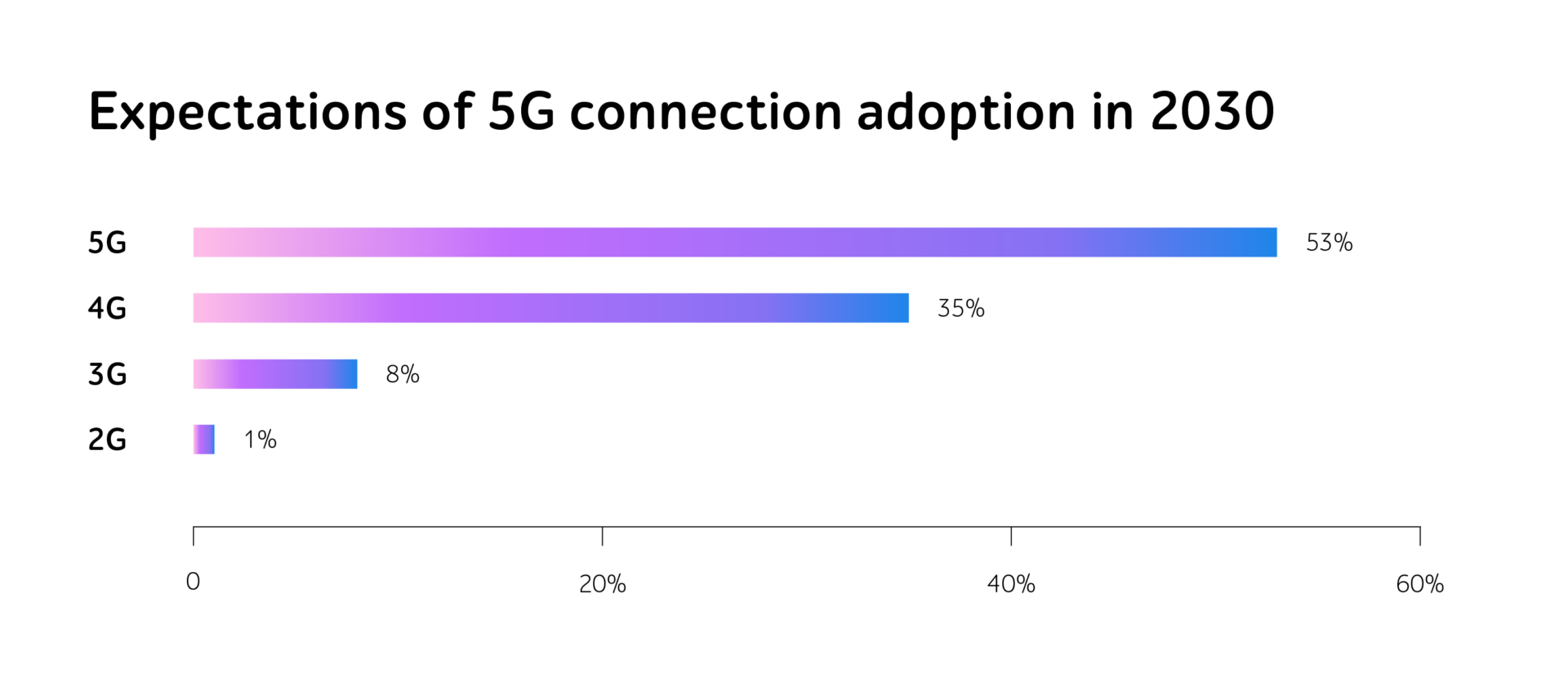

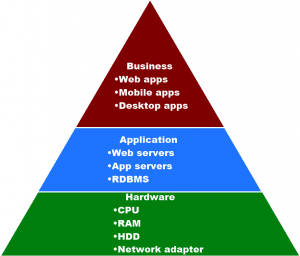

Digital products do not live only in the pristine lab environment where code works perfectly. Users access software on damaged screens, outdated browsers, unstable 3G connections, and customized OS skins. A single release must survive thousands of these chaotic combinations.

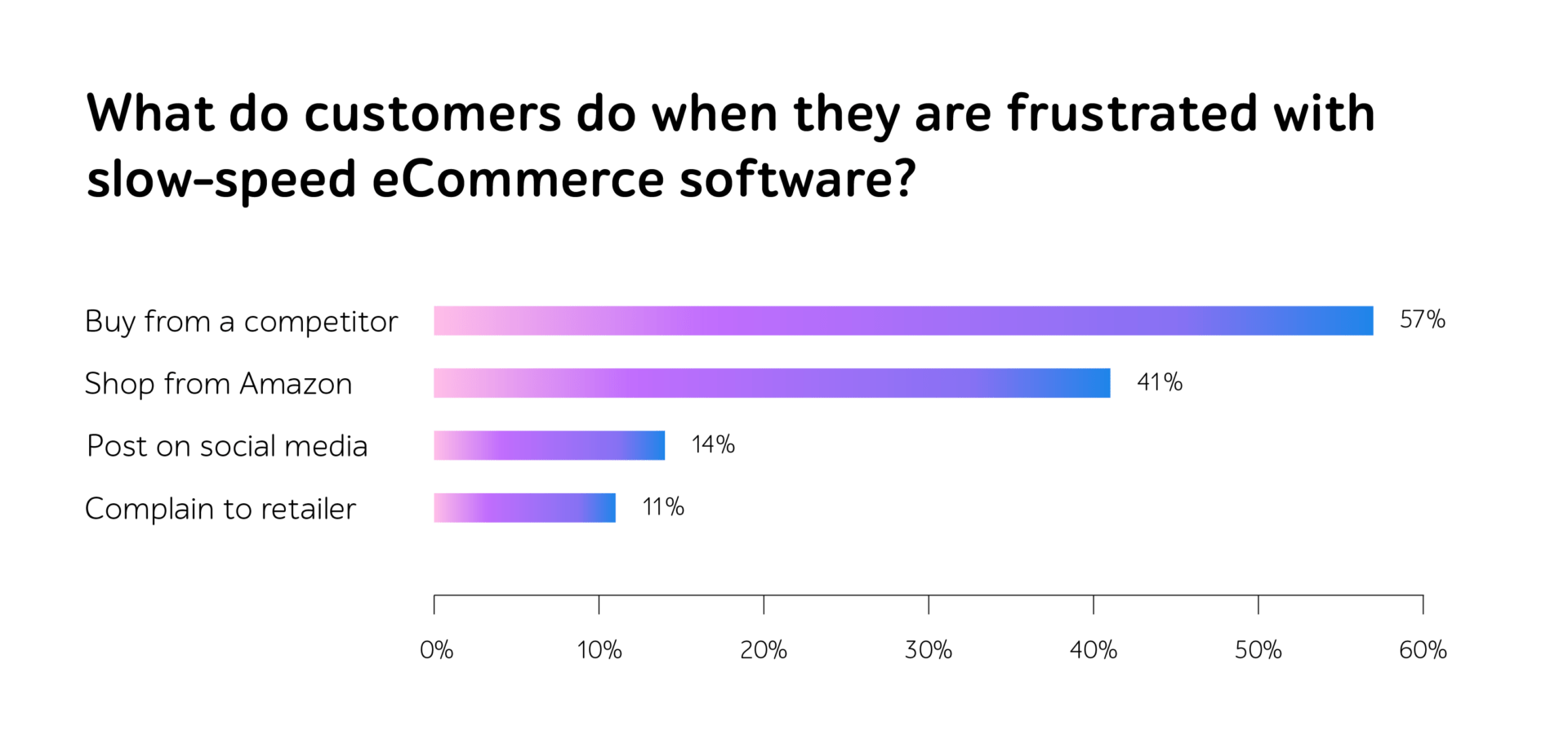

Ignoring this variance leads to failed logins, abandoned checkouts, and overloaded support channels. Global cart abandonment rates sit at nearly 70 percent, and industry data indicates that approximately 17 percent of those users leave specifically due to technical errors.

This is where compatibility testing becomes essential: it verifies that a product functions correctly in the fragmented environments real users actually have.

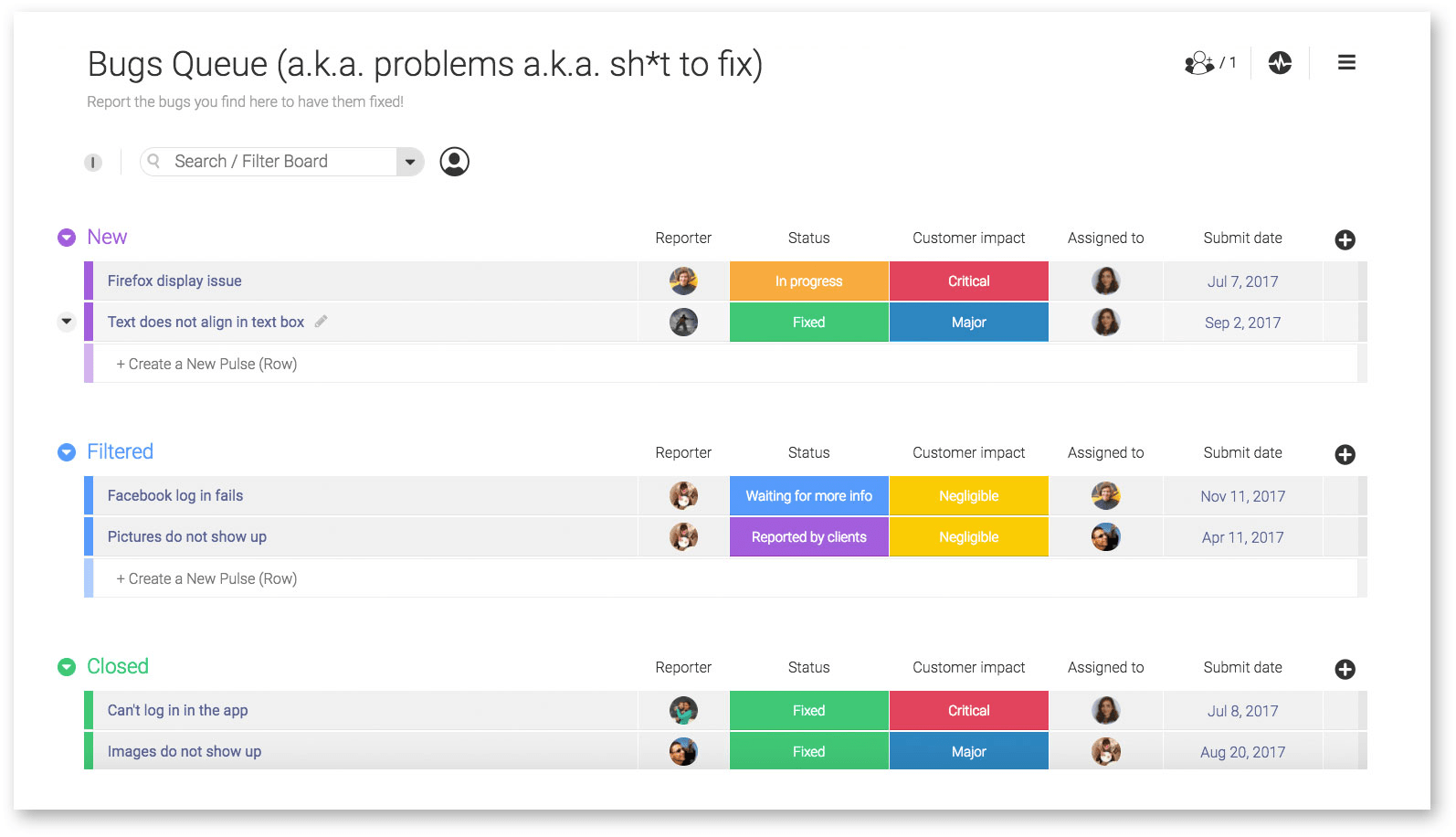

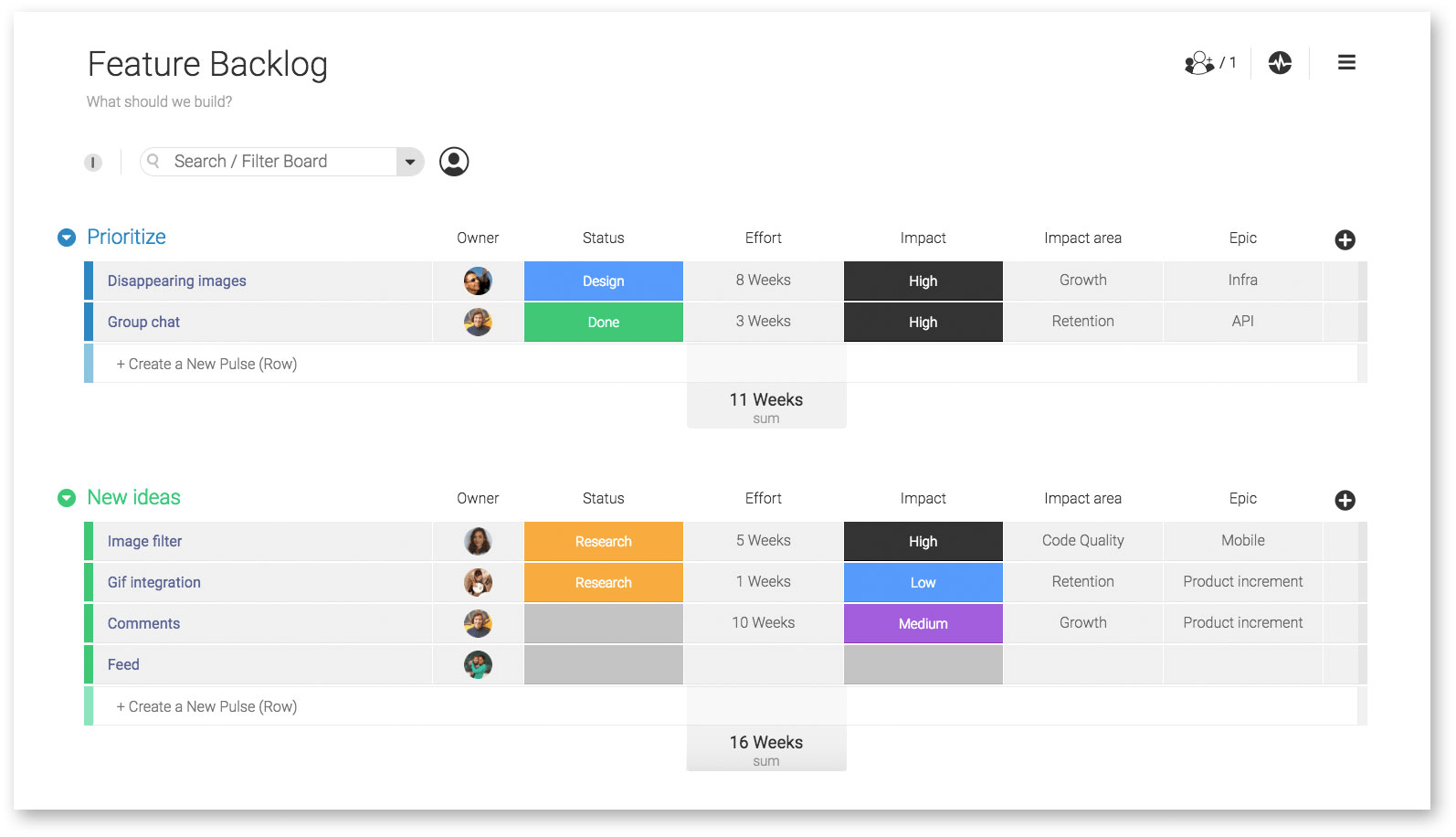

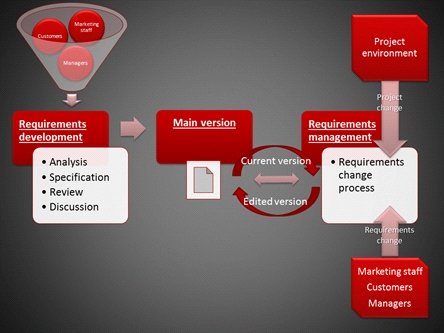

To make that complexity manageable at scale, teams rely on a compatibility matrix that acts as a comprehensive risk map for the project.

The compatibility matrix

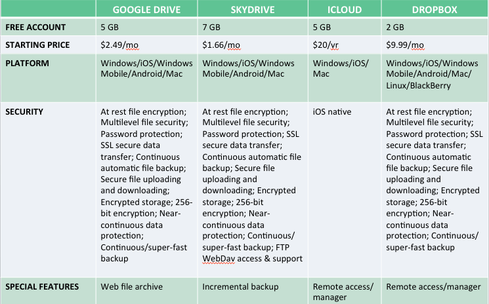

A compatibility matrix is a simple table that lists the device, browser, operating system, and network combinations you commit to support, ordered by how important they are for your users and revenue.

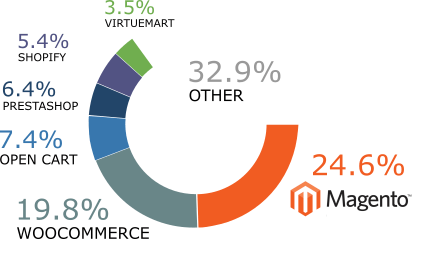

Since testing every combination is impossible, teams should use analytics to identify their main revenue drivers and structure testing across layers such as:

- Cross-browser: can customers complete key journeys on the primary desktop and mobile browsers in use?

- Cross-platform: does the layout remain usable and consistent across the main operating systems in the customer base?

- Device capabilities: do features that rely on hardware, such as cameras or sensors, interact correctly with the operating system on different device types?

If analytics show that, for example, 70 percent of revenue comes from mid-range Android devices using a particular browser, those combinations become top priority. Other environments receive best-effort status. This approach creates a shared, explicit definition of “supported” before any testing begins.
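The prioritisation described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the `Environment` fields, the `build_matrix` helper, the 90 percent coverage target, and the sample revenue shares are all assumptions made for the example, and real figures would come from your own analytics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    device: str
    browser: str
    os: str
    network: str
    revenue_share: float  # fraction of revenue, 0.0 to 1.0 (hypothetical figure)


def build_matrix(environments, coverage_target=0.9):
    """Rank environments by revenue share and assign a support tier.

    Environments are marked "priority" until the cumulative revenue share
    reaches coverage_target; everything after that is "best-effort".
    """
    ranked = sorted(environments, key=lambda e: e.revenue_share, reverse=True)
    matrix, covered = [], 0.0
    for env in ranked:
        tier = "priority" if covered < coverage_target else "best-effort"
        matrix.append((tier, env))
        covered += env.revenue_share
    return matrix


# Illustrative data only; not taken from any real product.
sample = [
    Environment("mid-range Android", "Chrome", "Android 13", "4G", 0.70),
    Environment("iPhone", "Safari", "iOS 17", "Wi-Fi", 0.15),
    Environment("desktop", "Edge", "Windows 11", "broadband", 0.10),
    Environment("low-end Android", "Opera Mini", "Android 9", "3G", 0.05),
]

for tier, env in build_matrix(sample):
    print(f"{tier:12} {env.device} / {env.browser} / {env.os} / {env.network}")
```

A cumulative cut-off is only one way to draw the line. Some teams may prefer a fixed number of priority rows or a per-market threshold; the point is that the rule is written down and agreed before testing starts.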

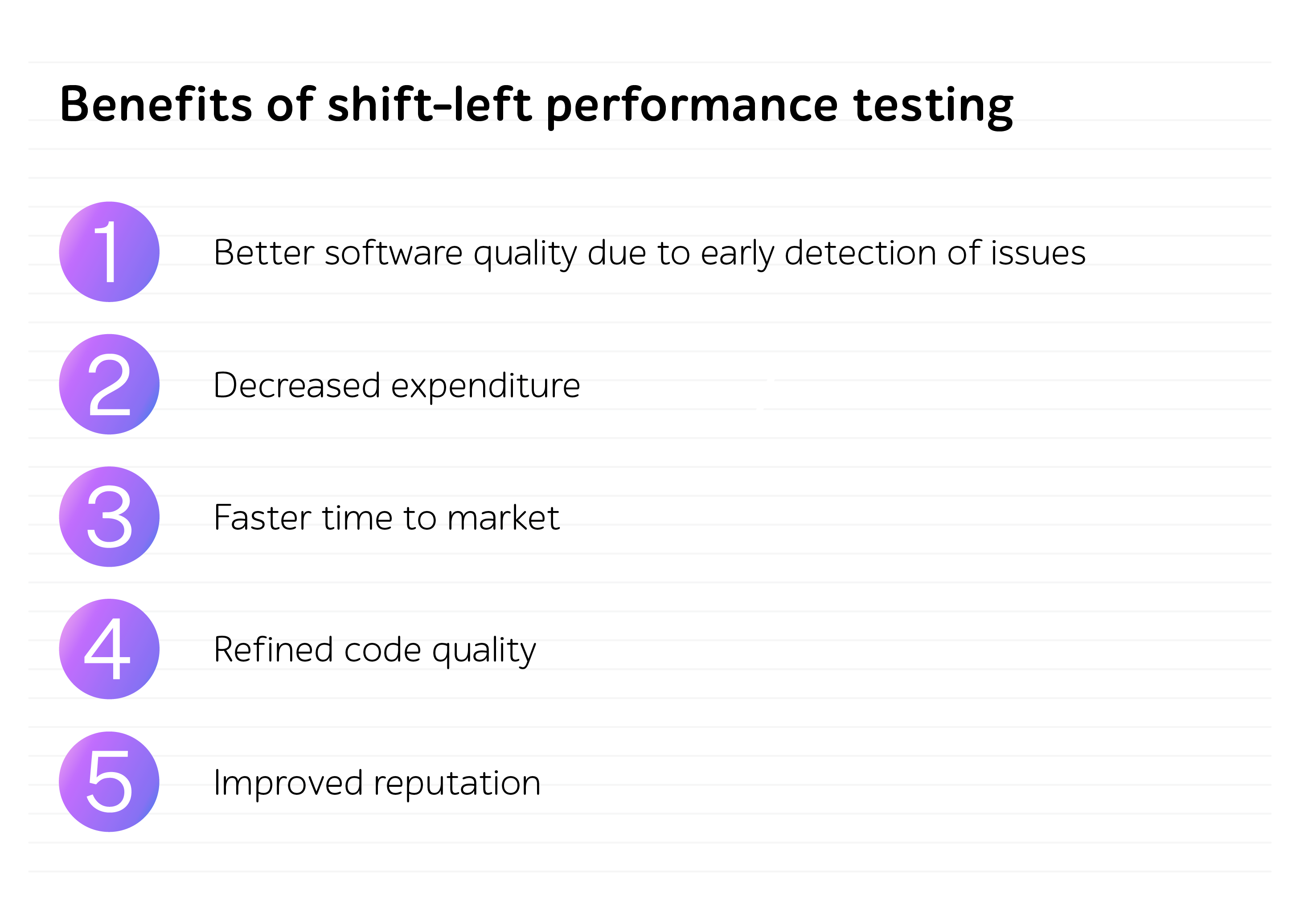

With that foundation in place, a disciplined compatibility testing strategy delivers six practical benefits for the business:

1. Stopping surprises before launch

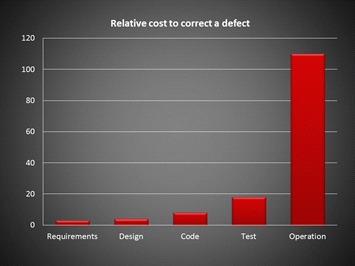

Launch day is the worst time to discover a defect.

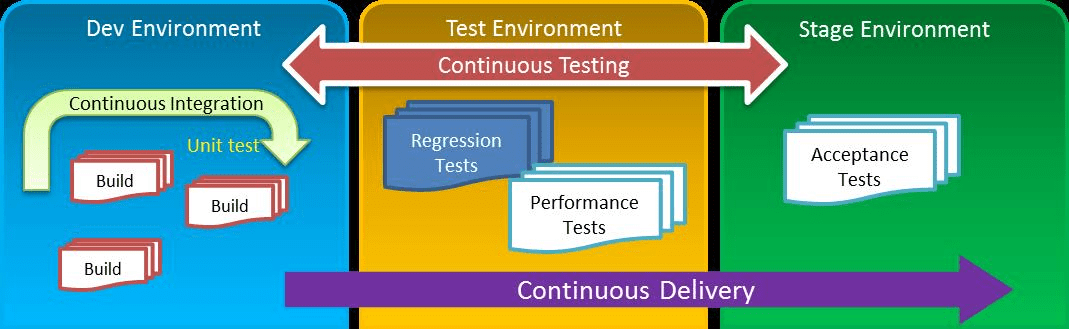

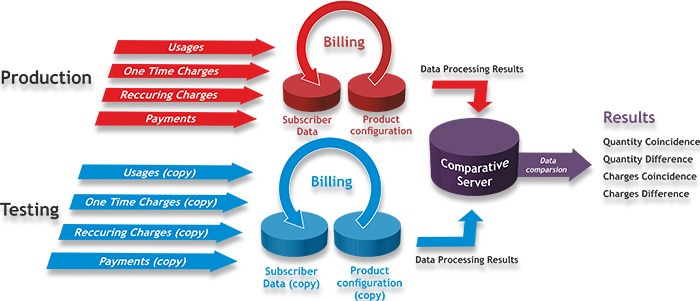

If a bug slips through, it often forces a delayed release or a risky hotfix. Teams should adopt a shift-left approach to compatibility, moving key checks earlier in the lifecycle so high-risk devices, browsers, and networks are exercised as soon as a feature is ready in a test environment. Instead of waiting for a final test phase, these environments are built into build pipelines and story acceptance criteria, so issues appear while the code is still being written and adjusted.

This avoids last-minute scrambles to fix broken checkout buttons or layout issues on high-traffic devices and browsers just hours before going live. Instead, engineers pick up and resolve these problems calmly during a standard sprint, before the release date is at risk.

2. Reducing operational firefighting

Production bugs consume valuable resources.

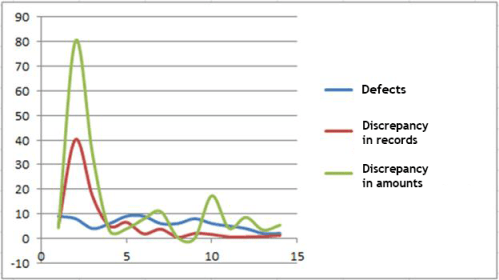

When issues leak into production, support and product teams burn hours trying to reproduce reports, checking screenshots, and working out whether the problem is user error, an unsupported setup, or a genuine defect.

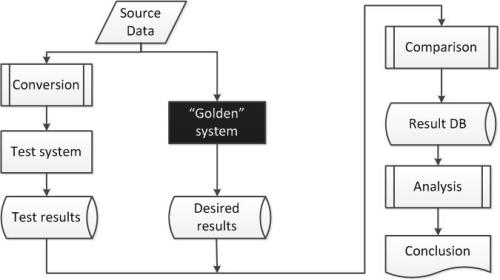

Structured compatibility testing reduces this noise. By exercising the priority combinations from the compatibility matrix before release, teams catch environment-specific failures, such as login flows that break on certain browser versions or journeys that stall under slow networks, before customers ever see them.

As a result, support queues shrink, engineering spends more time on the roadmap than on one-off hotfixes, and the organisation moves from constant firefighting to a more predictable delivery rhythm.

3. Safeguarding brand reputation

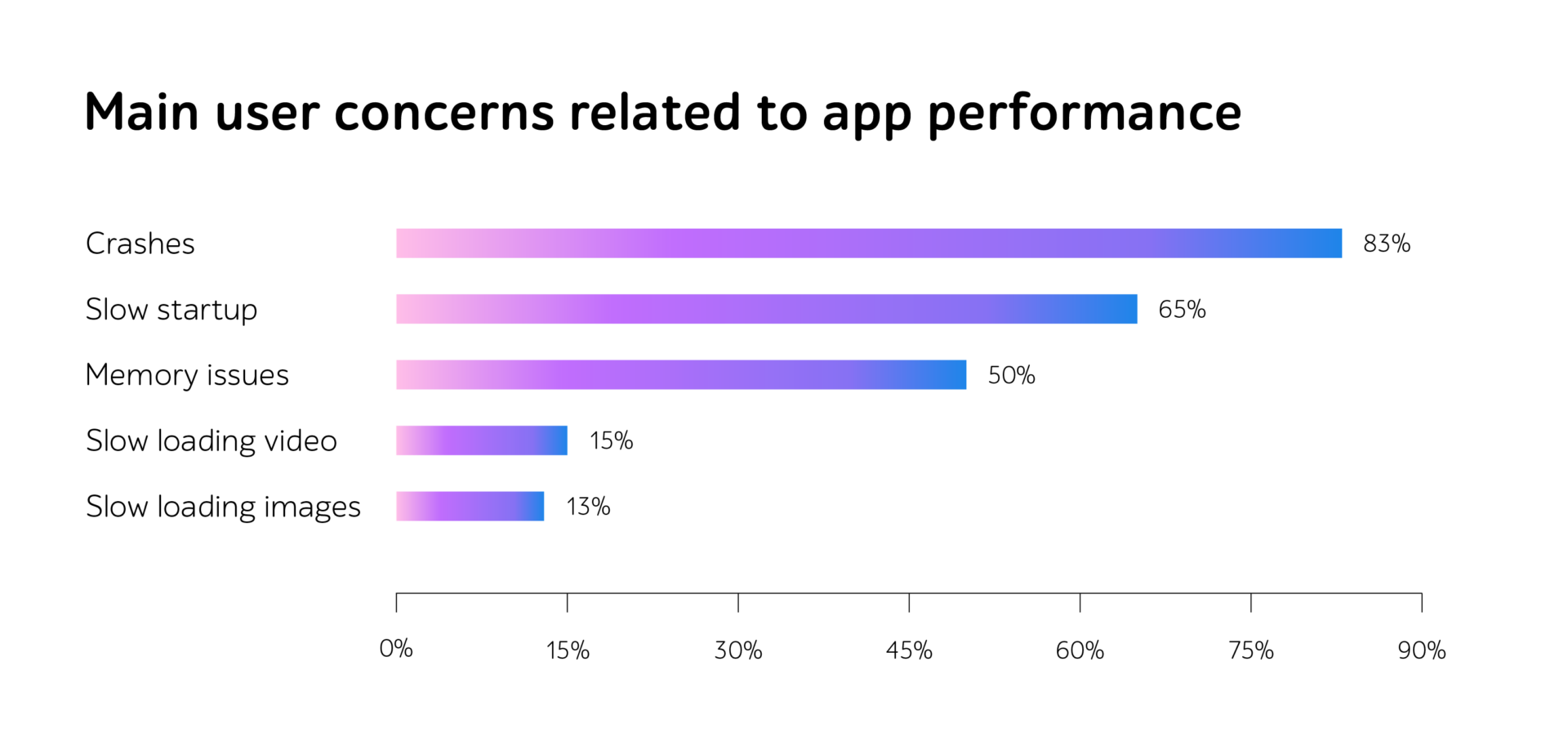

Consumers have little patience for fragility. However, the issue is not always a visible crash.

With thousands of Android models in circulation, the same app can behave very differently in the wild. Aggressive battery-saving policies may silently kill background processes, lower-end devices may unload apps under memory pressure, and older OS versions may not fully support modern web components. To the user, these behaviours look like random crashes, logouts, or “frozen” screens, even though the device is simply trying to conserve resources.

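Testing on representative devices exposes these behaviours before release, so teams can mitigate them, for example by moving critical background work into foreground services that the operating system is less likely to kill. This protects the brand promise across the devices customers actually use.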

4. Protecting revenue streams

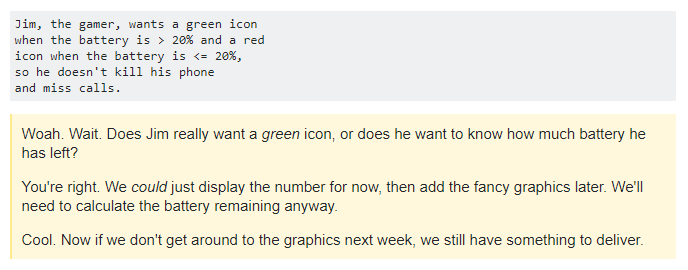

A crash is obvious. Silent defects, such as pages that never finish loading or buttons that appear but do nothing in certain environments, are not: in the data they simply look like customers losing interest and leaving.

In practice, funnel analysis may show that drop-off suddenly increases for a particular slice of the audience, such as mobile users, older devices, or a specific region. Structured compatibility testing against the agreed matrix helps teams replay those journeys, uncover the usability or behaviour issues that block progress, and restore performance for that segment once the issues are fixed. This is one of the most direct ways compatibility testing protects existing revenue streams.
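As a rough sketch of that kind of funnel check, the Python below compares each segment's drop-off rate with the overall baseline and flags outliers worth replaying in a compatibility run. The segment names, the counts, the `flag_segments` helper, and the 10-point margin are all hypothetical choices for illustration.

```python
def drop_off_rates(funnel):
    """Map each segment to its drop-off rate.

    funnel maps segment name -> (journeys started, journeys completed).
    Segments with no started journeys are skipped.
    """
    return {
        segment: 1 - completed / started
        for segment, (started, completed) in funnel.items()
        if started > 0
    }


def flag_segments(funnel, margin=0.10):
    """Return segments whose drop-off exceeds the overall rate by more than margin."""
    total_started = sum(started for started, _ in funnel.values())
    total_completed = sum(completed for _, completed in funnel.values())
    if total_started == 0:
        return []
    baseline = 1 - total_completed / total_started
    rates = drop_off_rates(funnel)
    return sorted(s for s, rate in rates.items() if rate > baseline + margin)


# Illustrative checkout funnel; the numbers are invented.
checkout = {
    "desktop": (1000, 400),
    "mobile-recent": (1000, 350),
    "mobile-older-devices": (500, 50),
}

print(flag_segments(checkout))
```

A flagged segment is a prompt for investigation, not proof of a defect. The same pattern can come from pricing, content, or audience differences, which is why the next step is to replay the journey in the matching environments.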

More broadly, teams should routinely verify that critical paths such as sign up, authentication, account access, search and payment work end to end across their priority environments, so that high-value customers are not quietly blocked by technical friction.

5. Speeding up delivery

Speed is critical. A perfect release offers little value if it arrives months late.

While some fear that testing slows development, modern strategies actually accelerate it.

Techniques like parallel execution and sharding allow teams to run automated suites across dozens of virtual devices overnight. This replaces days of manual testing on physical devices.

A well-matched test setup makes this possible. With enough capacity to run suites side by side, teams surface issues earlier, cut rework, and turn compatibility testing into a driver of faster releases and consistent user experience across their priority environments.
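To make the sharding idea concrete, here is a minimal Python sketch of how a suite could be split deterministically across workers. The `select_shard` helper, the journey and environment names, and the choice of four shards are assumptions for illustration; most test runners and device clouds offer their own sharding options, which would normally be used instead.

```python
def select_shard(test_ids, shard_index, total_shards):
    """Return the tests that one worker should run.

    Sorting first makes the split deterministic, so every worker can compute
    the same partition independently and each test lands in exactly one shard.
    """
    if not 0 <= shard_index < total_shards:
        raise ValueError("shard_index must be in range(total_shards)")
    return sorted(test_ids)[shard_index::total_shards]


# Hypothetical suite: each key journey paired with a priority environment.
journeys = ["login", "search", "checkout"]
environments = ["android-chrome", "ios-safari", "windows-edge"]
suite = [f"{journey}@{env}" for journey in journeys for env in environments]

# Each shard would be handed to a separate worker or virtual device.
shards = [select_shard(suite, i, 4) for i in range(4)]
for index, shard in enumerate(shards):
    print(index, shard)
```

Round-robin slicing keeps shard sizes within one test of each other. It does not account for how long each test takes, so suites with a few very slow journeys may need duration-aware balancing.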

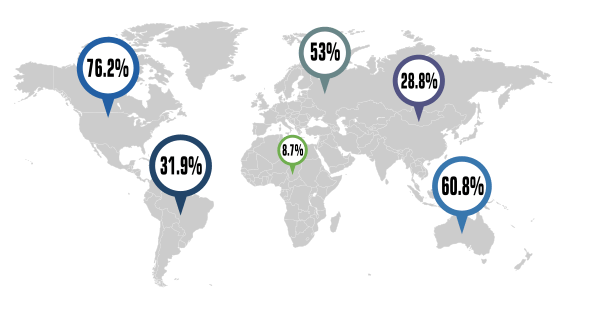

6. Securing market expansion

Compatibility testing is especially important when a business moves into unfamiliar environments, such as new regions, new channels, or new platform partnerships. It helps teams confirm that core journeys still work when device mix, network quality, or browser behaviour looks very different from the home market.

New and emerging markets often present harsher technical conditions. Expansion plans need to account for older or low-specification devices, patchy or congested networks, aggressive data-saving settings, and intermediary services such as proxy browsers or corporate gateways that modify traffic in transit. These factors can quietly break journeys that looked stable in lab conditions.

For example, a company launching a new digital service into a bandwidth-constrained region might find that sign up or payment journeys regularly time out on common Android handsets over 3G. Focused compatibility testing across representative devices and networks allows teams to spot these bottlenecks early, reduce payload size, strengthen offline and retry behaviour, and confirm that critical flows still succeed before committing budget to a full market launch.
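One way to picture stronger retry behaviour is a small wrapper that retries a timed-out request with increasing delays. The sketch below is an assumption-laden illustration: no specific retry policy is prescribed here, exponential backoff is simply a common choice, and the `call_with_retry` helper, its parameters, and the simulated `flaky_payment` call are all hypothetical.

```python
import time


def call_with_retry(operation, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call operation(), retrying on TimeoutError with exponential backoff.

    Delays grow as base_delay, 2 * base_delay, 4 * base_delay, and so on.
    The final failure is re-raised so the caller can show an error or
    queue the request for later.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except TimeoutError:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


# Simulated request that times out twice before succeeding.
calls = []


def flaky_payment():
    calls.append("attempt")
    if len(calls) < 3:
        raise TimeoutError("simulated slow network")
    return "ok"


delays = []  # record delays instead of actually sleeping
result = call_with_retry(flaky_payment, sleep=delays.append)
print(result, delays)
```

Passing `sleep` as a parameter keeps the example fast to run and easy to test. For payment journeys in particular, retries only make sense if the server-side operation is safe to repeat, which is itself worth covering in the compatibility run.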

Conclusion

Compatibility testing turns a chaotic ecosystem into a controlled business discipline.

With a clear compatibility matrix and the right mix of real devices, networks, and automation, teams remove guesswork from releases, cut rework, and keep critical journeys open for every high-value segment.

Instead of reacting to incidents after launch, organisations can release with confidence, knowing that sign up, verification, and payment work as intended where it matters most. This protects revenue, brand, and customer trust.

Lab checks alone are no longer enough. Products must prove they can withstand the real conditions your customers live and work in.

Contact a1qa specialists to review your current approach and design a compatibility strategy tailored to your customer base.